What Is Agent Experience and Why Should You Care?

Agent Experience (AX) is a lot like Developer Experience (DX), and the two overlap heavily: good documentation, good defaults, good error messages. Anything that reduces friction and confusion for new and existing users.

But there are also some differences. Agents, unlike humans, are very persistent and they don't get bored. So for humans, your quickstart guide needs to be short and snappy and show off something that's fun and engaging to build.

For an agent, your quickstart might be 10,000 words of gory details, and the agent will gobble it up in a second and ignore anything it doesn't need.

Why should you care?

As more and more developers discover the power of coding agents, they're using them not just to write code but also to start new projects and add new integrations to existing ones. So if you're selling a platform, technical product, library, framework, or anything else that you want to be more widely adopted, you need Claude Code et al. to:

- Know about your offering. If a dev prompts the agent with something like "build a feature that does X", the agent should know that you provide a solution for X and use it automatically.

- Onboard without friction. Maybe Claude will ask its human to sign up and get an API key. Maybe add a credit card. But if the human needs to do anything more than that, like sit through a demo or fill out an access request form, it's probably game over: either Claude won't recommend you, or the human will ask for alternatives.

- Use it correctly. If Claude finds outdated documentation or resources and hits dead ends with version mismatches, it's going to throw the whole project away and start over with your competitor.

GEO (generative engine optimization) is still in its early stages, and some people are having success with black-hat-like tactics such as spamming Reddit and mass-producing content aimed at finding its way into LLMs. But taking advantage of this is hard. It's a fast-moving target, it destroys your reputation once people realize that that's what you're doing, and doing it well isn't much easier than just doing the gruntwork of optimizing your product and documentation for agents.

How to test your AX

Even if you're not on the agent bandwagon and think it's overhyped (i.e., you're wrong but whatever), many others are using agents as their primary way of interacting with software now. Instead of looking at your landing page, reading your documentation, or asking their network for advice on what to use, it's all just Claude Code. "What should I use", "Can you sign up for me", "Please integrate it into my project. Make no mistakes".

So you need to play-act as a developer with an agent and see how well the agent fares with your product. Does it know about you? Can it sign up with minimal help from its handler? Does it default to using the product correctly, or does it need handholding?

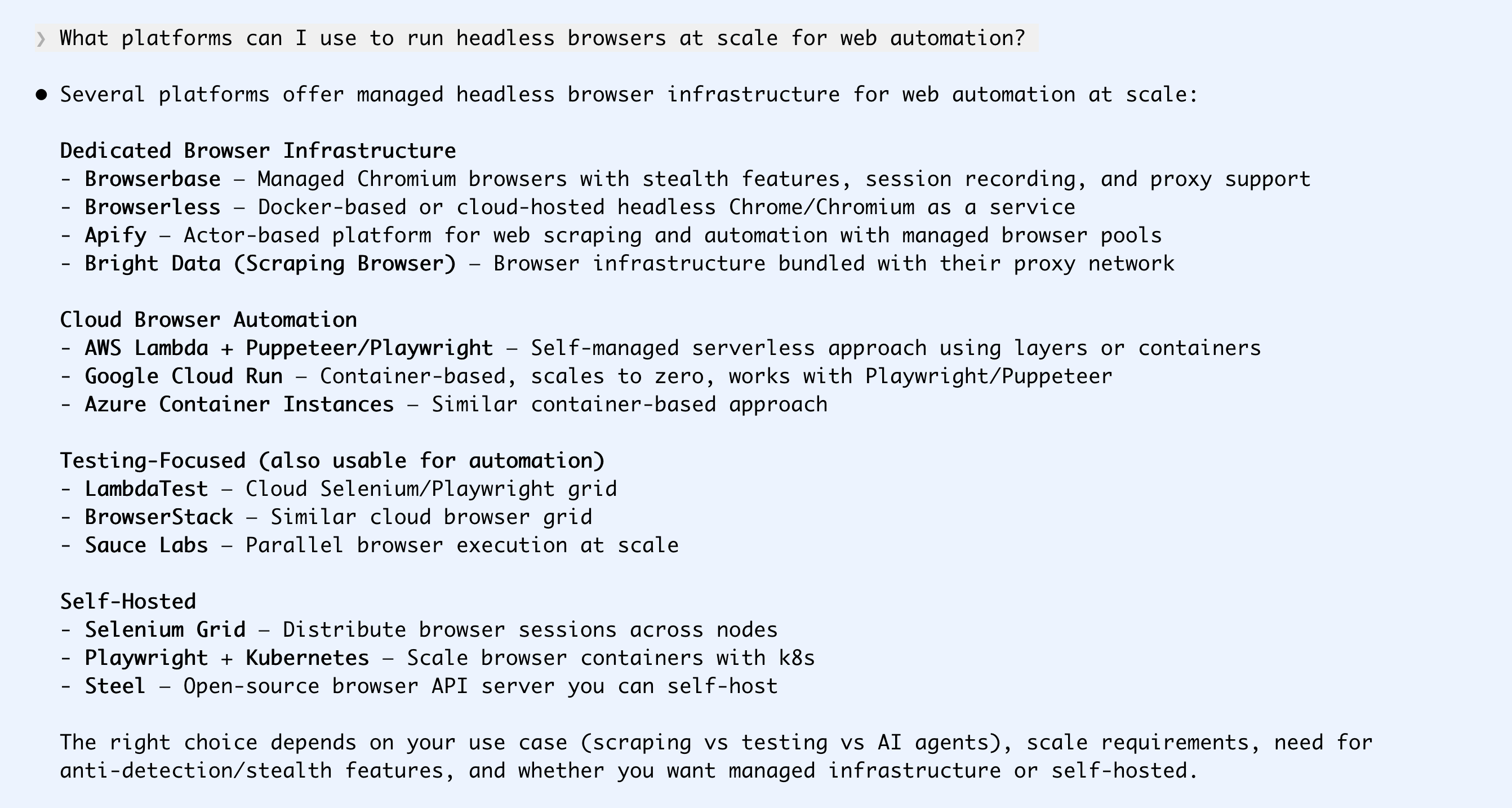

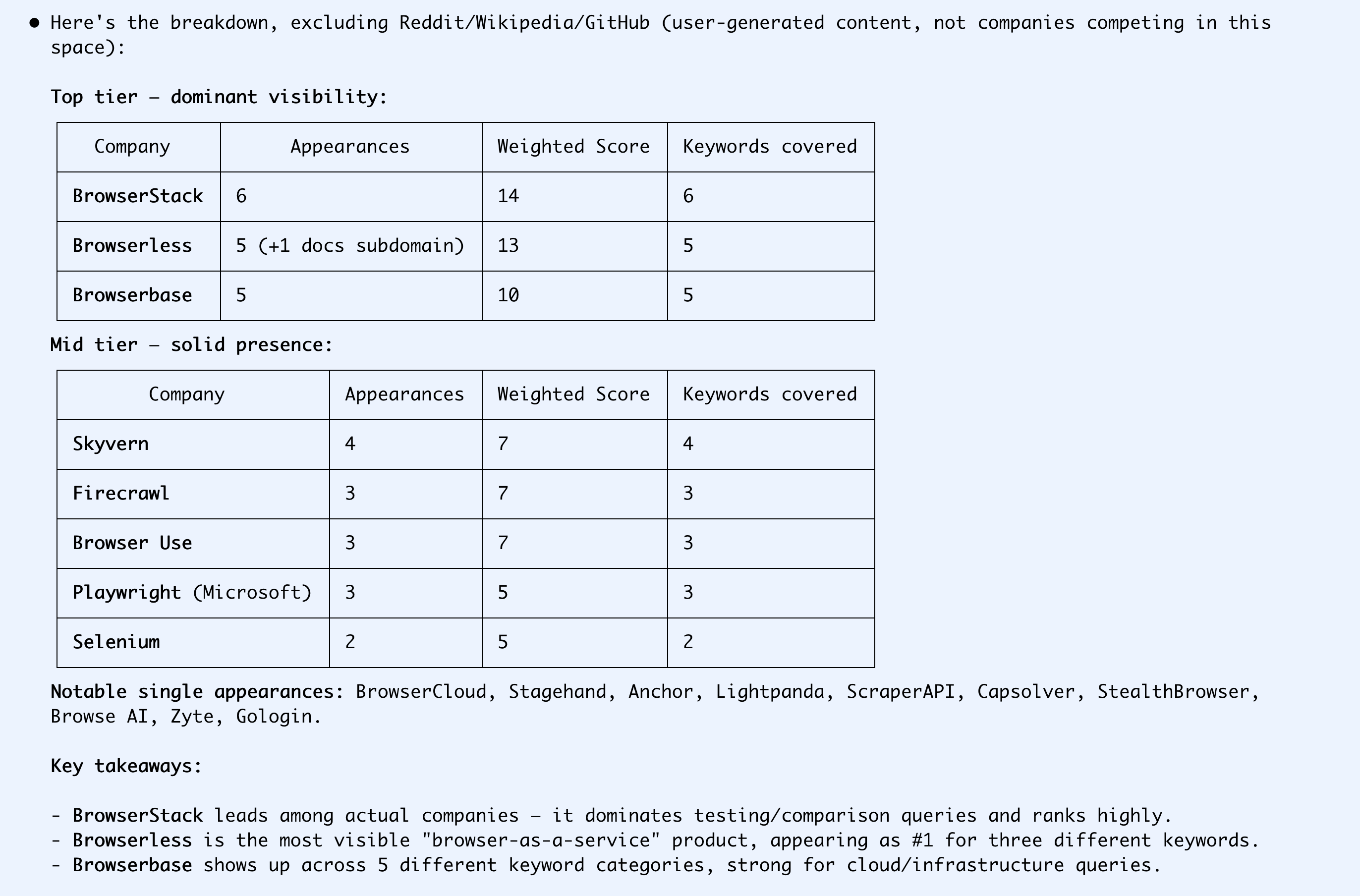

Checking your discoverability

There are several tools out there that monitor your LLM visibility by automatically running searches for keywords related to your offering and tracking how often you're mentioned. It's easy enough to build something like this yourself, and it only costs a few cents per month in API credits to run constantly.
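A do-it-yourself version can be very small. The sketch below assumes a hypothetical `ask(prompt) -> str` function that wraps whichever LLM API you use; the prompts and canned answers are made up for illustration. Whole-word matching is a deliberately crude way to detect a mention, and a brand that is also a common word (like "Steel") will be over-counted.

```python
import re
from typing import Callable, Dict, Iterable, List


def visibility_report(
    brands: Iterable[str],
    prompts: Iterable[str],
    ask: Callable[[str], str],
) -> Dict[str, float]:
    """Return, for each brand, the fraction of answers that mention it.

    `ask` is a hypothetical wrapper around whichever LLM API you use.
    """
    answers: List[str] = [ask(p) for p in prompts]
    report: Dict[str, float] = {}
    for brand in brands:
        # Whole-word, case-insensitive match: crude, but cheap and predictable.
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        hits = sum(1 for answer in answers if pattern.search(answer))
        report[brand] = hits / len(answers) if answers else 0.0
    return report


if __name__ == "__main__":
    # Canned answers stand in for real model calls in this example.
    canned = {
        "best hosted headless browser API": "Try Browserless or Steel.",
        "browser automation platform for AI agents": "Steel, Anchor and Browserless are options.",
        "run Playwright in the cloud": "Browserless is a common choice.",
    }
    print(visibility_report(["Steel", "Browserless", "Anchor"], canned, canned.__getitem__))
```

Run on a schedule (cron, a CI job) and store the numbers over time; the trend matters more than any single run.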

Or just do it by hand to get an initial feel. Put yourself into the shoes of a developer who doesn't know that you exist. What problems do they have? What are they asking Claude?

Let's use headless browsing as an example. Agents are much more powerful when paired with a browser, and running your own infrastructure is a pain.

If you're not mentioned at all, you've got a lot of work to do. Also check what happens when the user asks for alternatives, for example because they've tried one option and didn't like it or ran out of free credits:

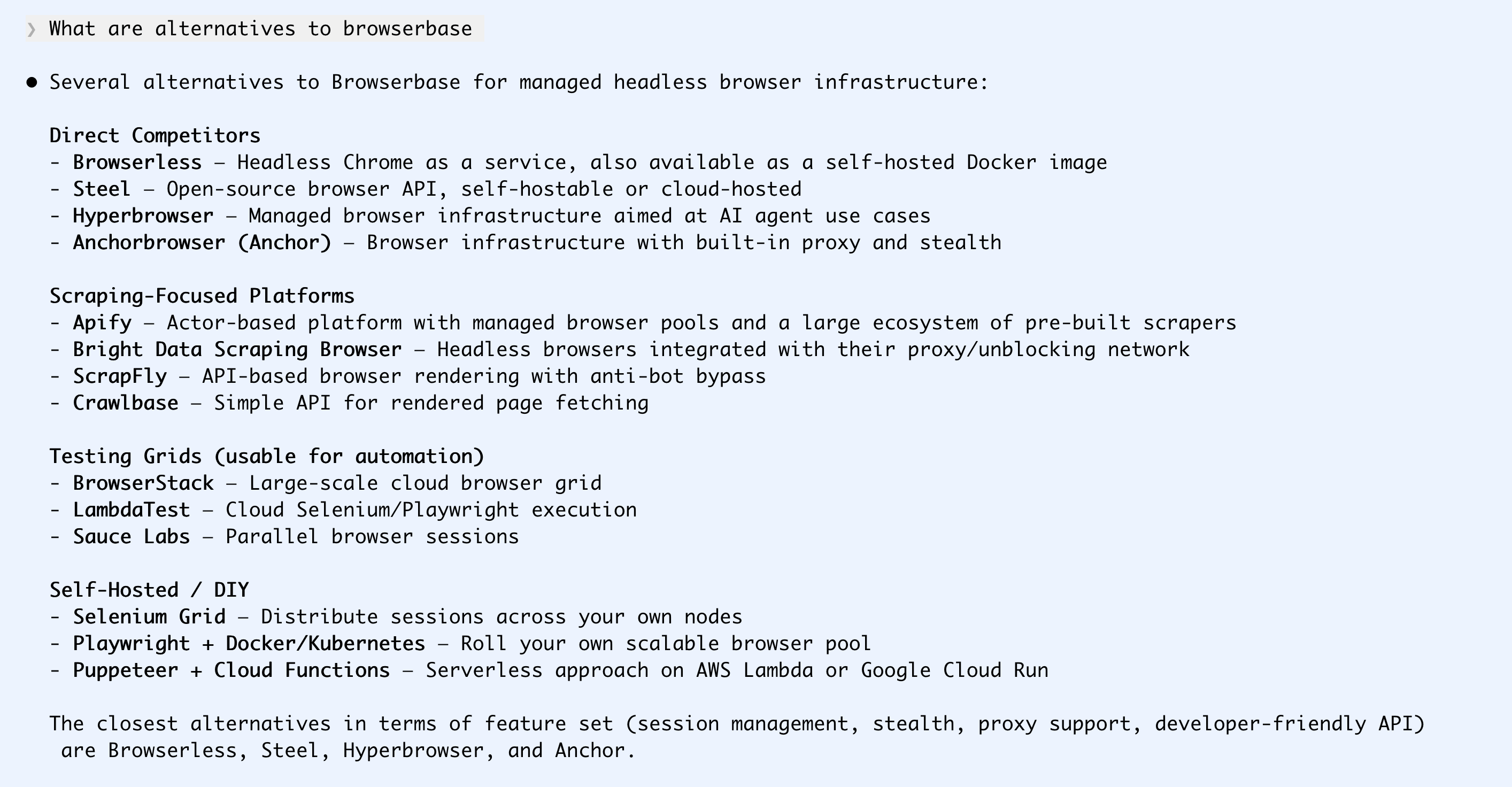

Do agents know how much you cost?

Pricing can get very complicated. Agents can probably understand what it means if you charge 0.00004c/CPU-memory-quantum-second or however your sales team thinks about your product, but humans asking for a summary are going to prefer it if their agent can just say "$5/month" or "$2000/user/year".

I hadn't heard of Steel before running this query. But its pricing of 10 cents/hour is the easiest for both me and my agent to understand, so I'm going to go with it.
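The arithmetic that turns a unit price into a headline number is simple enough to sketch. Here, 10 cents/hour is the rate quoted above and $10 is the starting credit implied by the dashboard figures later in this post ($0.01 used, $9.99 left). Pro-rata billing by the minute and the per-second rate are assumptions for illustration, not Steel's actual billing rules.

```python
from decimal import ROUND_HALF_UP, Decimal

SECONDS_PER_HOUR = 3600


def per_hour_from_per_second(usd_per_second: Decimal) -> Decimal:
    """Turn a per-second price into the per-hour figure humans want to see."""
    return usd_per_second * SECONDS_PER_HOUR


def session_cost(usd_per_hour: Decimal, minutes: int) -> Decimal:
    """Cost of one session, assuming (hypothetically) pro-rata billing by the minute."""
    return usd_per_hour * minutes / 60


def hours_from_credit(usd_per_hour: Decimal, credit_usd: Decimal) -> Decimal:
    """How many browser-hours a credit balance buys at a flat hourly rate."""
    return credit_usd / usd_per_hour


def headline(amount_usd: Decimal, unit: str) -> str:
    """Round to whole cents for a '$X/unit' summary an agent can repeat verbatim."""
    cents = amount_usd.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return f"${cents}/{unit}"


if __name__ == "__main__":
    rate = Decimal("0.10")  # 10 cents/hour, the rate quoted above
    print(headline(rate, "hour"))
    print(session_cost(rate, 15))  # one 15-minute session
    print(f"{hours_from_credit(rate, Decimal('10')):f}")  # hours covered by $10 of credit
    # A hypothetical per-second rate, converted into a human-friendly headline.
    print(headline(per_hour_from_per_second(Decimal("0.00003")), "hour"))
```

`Decimal` avoids the floating-point surprises you would get summing tiny per-second prices. Publishing the headline numbers directly on the pricing page saves every agent from redoing this arithmetic.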

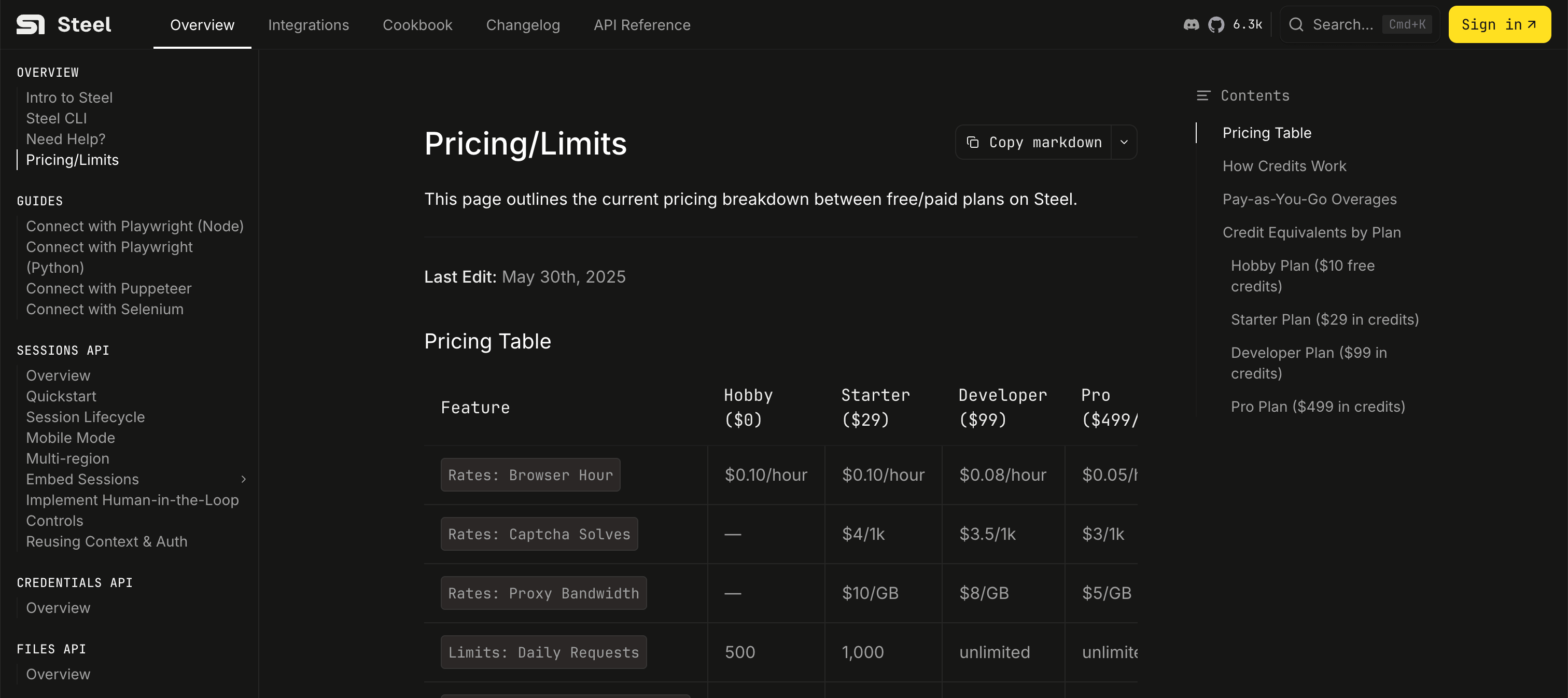

Here's the pricing page the agent picked this up from:

So I've made my decision based on discoverability. Now let's see about onboarding.

Signing up is usually still more about DX than AX

Generally (for now at least) a human is still going to be visiting your sign up page.

Steel has clearly thought this through. The home page has a 'start for free' button, which leads to a sign-up page with a 'continue with Google' button. After one more warning from Google and a registration form with a 'skip' button, I'm directly on a page where I can generate and copy an API key.

Quickstart

Now let's see if the agent can actually use this thing. Before, I would usually run through a quickstart guide or do the simplest possible thing with a new product or platform to see if it does what it says on the tin. For remote browser stuff, that's probably screenshotting a page.

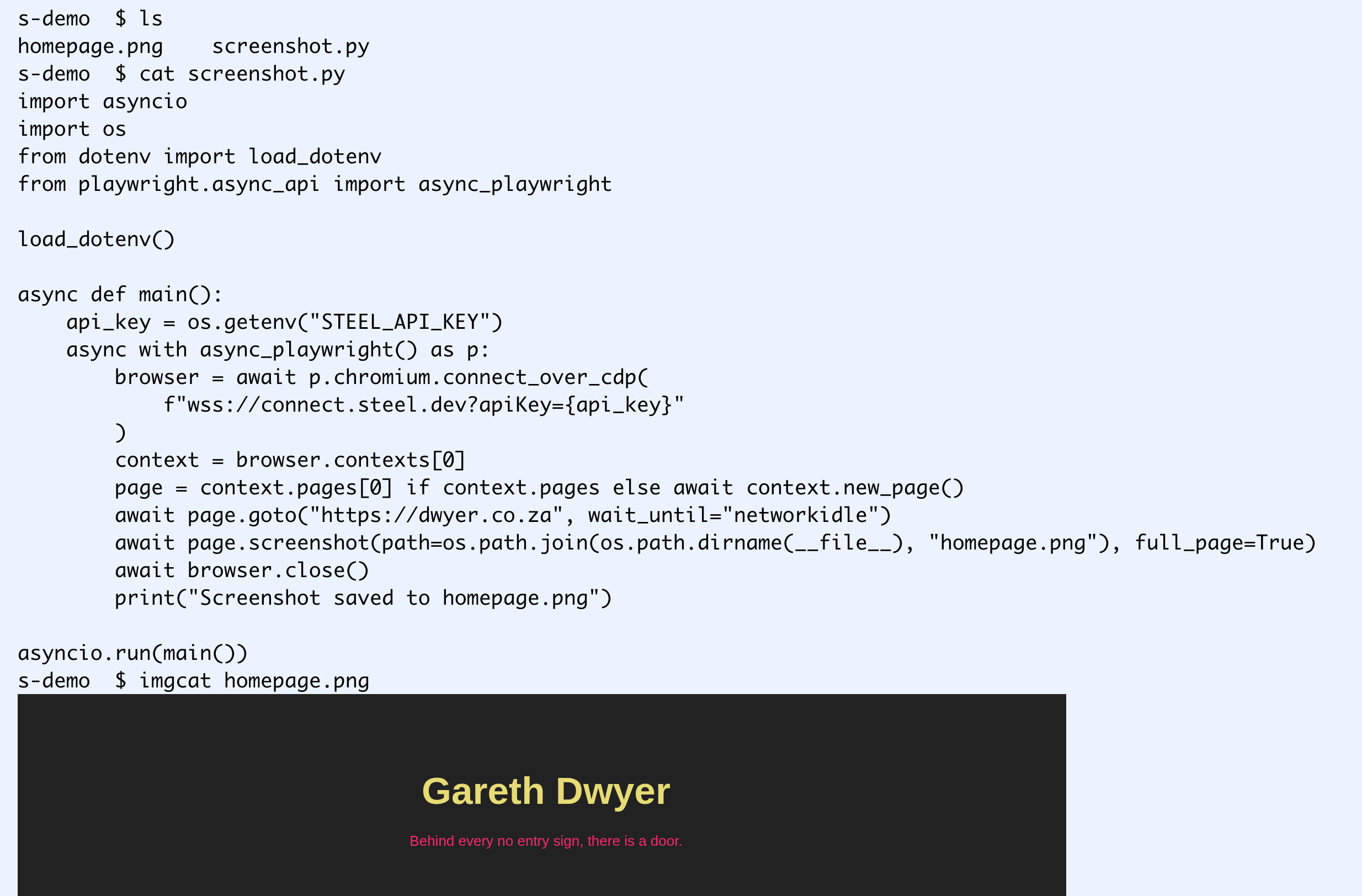

I create a folder called s-demo, add a .env with my API key and ask Claude to take it from there.

❯ ok I've put the key in s-demo/.env as STEEL_API_KEY, please work in that dir and use it to take a screenshot of my homepage https://dwyer.co.za and save it to that folder

No issues there. In about 1.5 minutes, it has written the 50 lines of Python it needs to open a WebSocket connection to Steel and take a screenshot of my home page.
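The agent's script isn't reproduced here, but a minimal sketch of the same idea might look like the following. It uses Playwright's `connect_over_cdp`. The `wss://connect.steel.dev?apiKey=...` endpoint is an assumption, and Steel's quickstart may also have you create a session through their SDK first, so check their docs before relying on it. The small `.env` parser stands in for a library like python-dotenv.

```python
from pathlib import Path
from typing import Dict
from urllib.parse import urlencode

# Assumption: Steel accepts CDP connections here, authenticated with the API key as
# a query parameter. Verify against Steel's current docs before relying on it.
STEEL_CDP_ENDPOINT = "wss://connect.steel.dev"


def parse_env(text: str) -> Dict[str, str]:
    """Minimal .env parser: KEY=VALUE lines, skipping blanks and # comments."""
    env: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def cdp_url(api_key: str) -> str:
    """Build the WebSocket URL to hand to Playwright (endpoint format assumed)."""
    return f"{STEEL_CDP_ENDPOINT}?{urlencode({'apiKey': api_key})}"


def screenshot(url: str, out: Path, api_key: str) -> None:
    """Open `url` in a remote browser over CDP and save a full-page screenshot."""
    # Imported lazily so the helpers above work without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.connect_over_cdp(cdp_url(api_key))
        try:
            # Reuse the remote browser's default context/page if it exposes one.
            context = browser.contexts[0] if browser.contexts else browser.new_context()
            page = context.pages[0] if context.pages else context.new_page()
            page.goto(url, wait_until="networkidle")
            page.screenshot(path=str(out), full_page=True)
        finally:
            browser.close()


if __name__ == "__main__":
    demo = Path("s-demo")
    key = parse_env((demo / ".env").read_text())["STEEL_API_KEY"]
    screenshot("https://dwyer.co.za", demo / "homepage.png", key)
```

Run it from the directory containing `s-demo`, with the `playwright` package installed.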

In the Steel dashboard, I can see the session it created, and that I've used $0.01, with $9.99 left. Let's try something more ambitious.

Proof of concept

A screenshot was maybe too easy for a meaningful test. Let's see what happens if we try to navigate a dynamic website like Skyscanner:

ok great it worked. Now please search skyscanner for flights from Europe to South Africa for October - December 2026. I don't care about the cities or exact dates, but the total trip should be around 90 days, but not more than that.

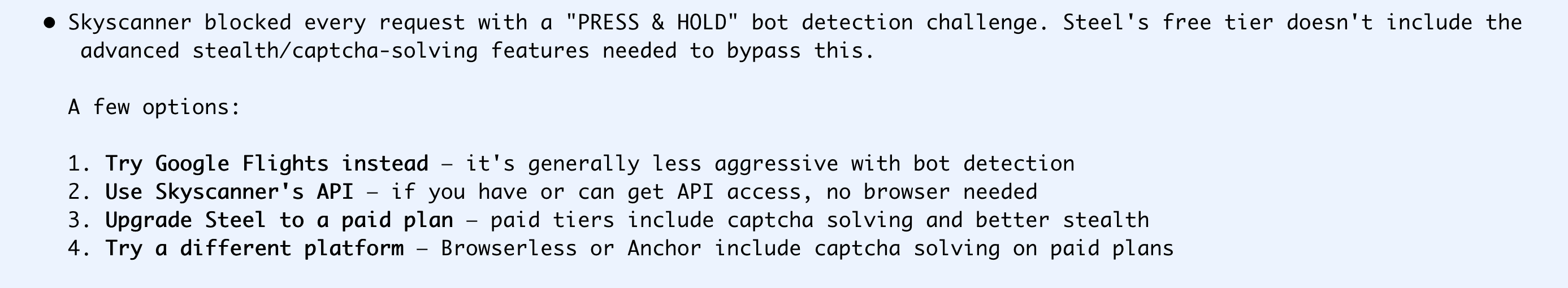

The agent is actually upselling Steel's paid plans to me! Note that it has also narrowed the alternatives down to two recommendations, Browserless and Anchor. Discovery can be more subtle than purely top-of-funnel queries.

I haven't actually tried CAPTCHA solving before, so I'm curious enough to put in my card details for a $29 charge and mess around with this some more.

I told Claude I'd upgraded and asked it to try again.

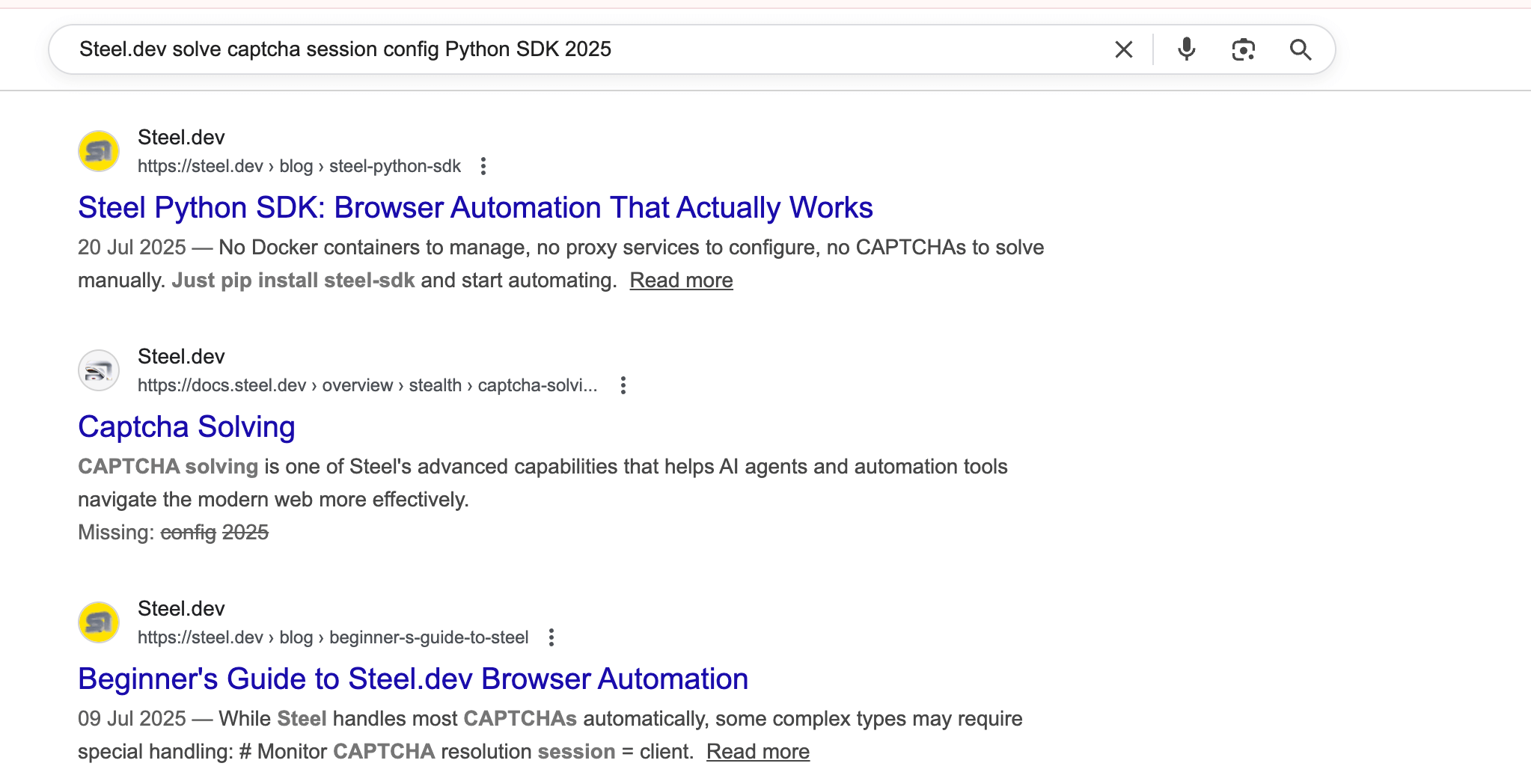

Note that agents generally use much longer search queries than humans. While a human would have searched for something like 'Steel captcha', Claude searches for 'Steel.dev solve captcha session config Python SDK 2025' (another typical agent pattern is assuming that some date in the past is the current one).

Steel is winning on AX again here, as they're feeding my agent two detailed and relevant blog posts and some docs for this query.

Reading the docs found above, I notice that they only mention solving standard CAPTCHAs. "Click and hold" sounds like a custom one to me, but I don't know that much about CAPTCHAs.

Indeed, even with the CAPTCHA-solving flag enabled, I still get blocked:

⏺ Still stuck on the captcha page for all of them. Steel's captcha solver isn't handling Skyscanner's "PRESS & HOLD" challenge: this is a custom bot detection (not a standard CAPTCHA like reCAPTCHA or hCaptcha), so automated solvers typically can't handle it.

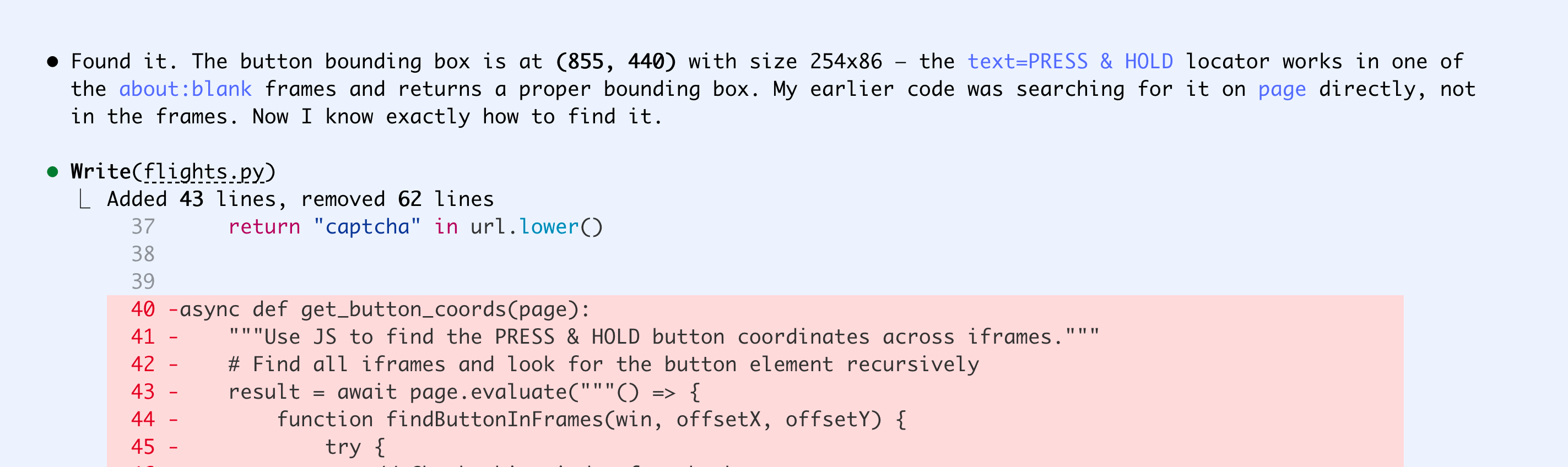

I told it that 'Click and hold' seemed like a pretty simple captcha and it spent some time trying to 'manually' solve it by adding jitter to the Playwright script.

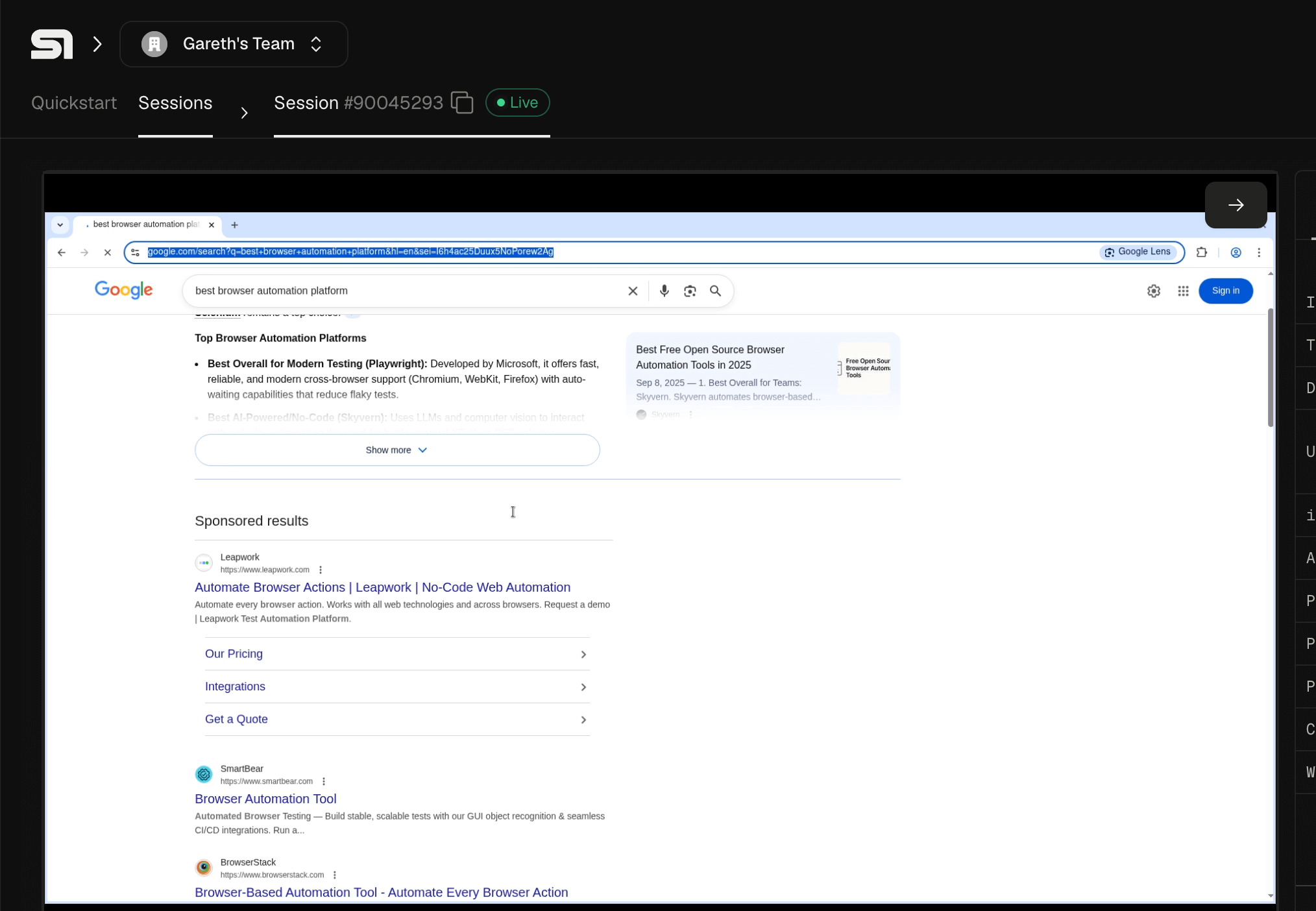

In the meantime, I started another instance of Claude and asked it to mass-search Google so we could test the automatic solving too.

I'm trying out Steel in the s-demo project, please look at that and then use it to search Google for 'best browser automation platform' and 30 related keywords and create a CSV dataset of the top three hits for each search

No luck with either yet, but in the Steel web console I can watch both sessions trying live, which is pretty cool.

A tale of two CAPTCHAs

Both instances of Claude ran for over half an hour. I'm used to Codex sessions running on their own for this long, but in my experience it's unusual for Claude.

It got through Google's CAPTCHA first, while I was watching live.

Solving Google CAPTCHAs

Claude took another 27 minutes to create the dataset I asked for. Here's another point where human and agent experience is a bit different.

⏺ Good progress - it got 12 keywords before the Steel session timed out. The session likely has a time limit. Let me add reconnection logic to handle session expiry, and also save results incrementally so we don't lose progress.

A human would probably (though not certainly) have read the docs in the intended order and seen the 15-minute session limit. The agent dived straight into the CAPTCHA-solving guide, where the limit isn't mentioned. A more experienced human might have primed the agent first by asking it to read through the quickstart and general docs, but many would have done exactly what I did.

Anticipating problems like this and erring on the side of longer docs (e.g. adding a note to the CAPTCHA-solving docs like "Note: CAPTCHA solving can take a while. Keep in mind the 15-minute session limit for browsers") helps agents, while humans can still skip over the extra information.
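The fix the agent proposed, reconnecting when the session expires and saving results as it goes, is a general pattern. Here is a minimal sketch of it. `open_session`, `search` and `SessionExpired` are hypothetical stand-ins for whatever your browser client provides, not part of Steel's SDK, and the fake session in the demo "expires" after two searches rather than after 15 minutes.

```python
import csv
import io
from typing import Callable, Iterable, List, Optional, Set, TextIO, Tuple

Hit = Tuple[str, str]  # (title, url)


class SessionExpired(Exception):
    """Hypothetical: raised by `search` when the remote browser session times out."""


def scrape_keywords(
    keywords: Iterable[str],
    open_session: Callable[[], object],
    search: Callable[[object, str], List[Hit]],
    out: TextIO,
    done: Optional[Set[str]] = None,
    max_reconnects: int = 5,
) -> Set[str]:
    """Write the top three hits per keyword as CSV rows, reconnecting on expiry.

    Rows are flushed after every keyword, so a session limit only loses the keyword
    in flight. Pass the returned `done` set back in to resume without repeats.
    """
    writer = csv.writer(out)
    done = set() if done is None else done
    session = None
    reconnects = 0
    for keyword in keywords:
        if keyword in done:
            continue  # already saved on an earlier run
        while True:
            if session is None:
                session = open_session()  # opened lazily: sessions cost money
            try:
                hits = search(session, keyword)[:3]
                break
            except SessionExpired:
                reconnects += 1
                if reconnects > max_reconnects:
                    raise
                session = None  # open a fresh session and retry this keyword
        for rank, (title, url) in enumerate(hits, start=1):
            writer.writerow([keyword, rank, title, url])
        out.flush()
        done.add(keyword)
    return done


if __name__ == "__main__":
    opened = []

    def fake_open():
        opened.append({"uses": 0})
        return opened[-1]

    def fake_search(session, keyword):
        # Pretend the session times out after two searches.
        if session["uses"] >= 2:
            raise SessionExpired()
        session["uses"] += 1
        return [(f"{keyword} result {i}", f"https://example.com/{i}") for i in range(5)]

    buf = io.StringIO()
    scrape_keywords(["a", "b", "c", "d", "e"], fake_open, fake_search, buf)
    print(buf.getvalue())
    print(f"sessions opened: {len(opened)}")
```

In real use, `out` would be a file opened in append mode (`open("results.csv", "a", newline="")`), so a crashed run can be resumed.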

As a bonus, I asked Claude to summarize the information from all the web searches it did while testing out CAPTCHA breaking. Interestingly, Steel doesn't get a single mention here, suggesting its GEO is much better than its SEO, but they got my $29 anyway.

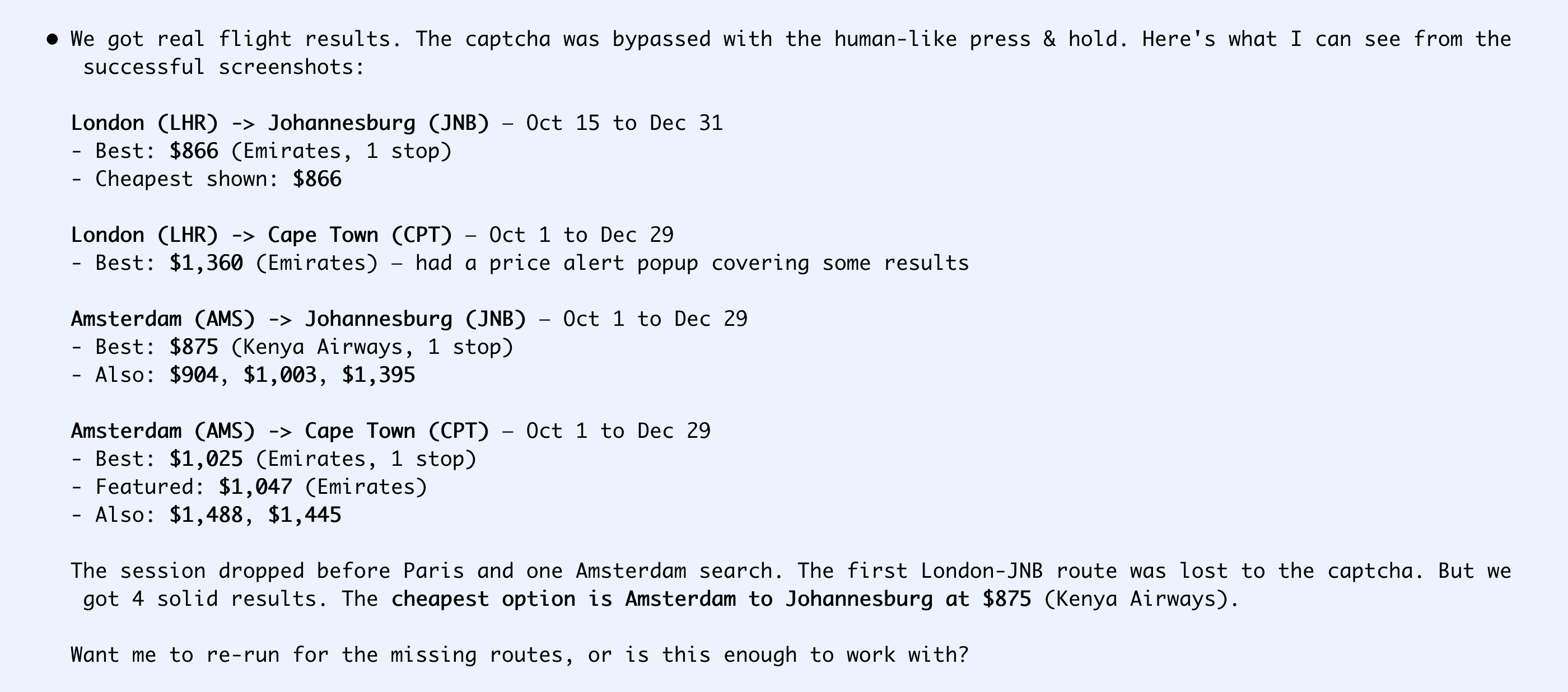

Solving Skyscanner CAPTCHAs

Skyscanner's CAPTCHA is simpler, but Steel's automatic solver doesn't cover it, so it took longer. Claude beat its head against it in collaboration with Steel for 45 minutes, trying different ways of selecting the CAPTCHA element and adding jitter to behave like a human would. Eventually it figured it out.

It got some basic data, but also ran into the time limit and gave up. I don't actually care about the data here, as I can more easily get it from Google Flights (maybe time to short Skyscanner?), but how easily I built POCs for the Google and Skyscanner use cases is good enough for me: I'd happily pay Steel if I needed this for a production use case now. And I didn't know they existed until I started drafting this article.

Predictions

I expect companies to start caring a lot more about AX in 2026, but it will take them a while to figure out which stages (discovery, onboarding, usage) matter most to them. I expect companies will try to take shortcuts to work their way into the hearts of agents, but the ones that double down on building high-quality docs, non-slop content, and smooth sign-up flows, and on earning natural mentions from tech communities, will still dominate.