Agent Experience Guide: Transcription APIs - OpenAI vs Groq vs Mistral

I asked an AI agent to transcribe a video and generate subtitles. Within minutes, I had working code that processed the audio, extracted timestamps, and formatted everything as an SRT file. Here's the result, a Python networking tutorial with AI-generated word-level subtitles:

The agent picked an API, wrote the code, and it worked. But which API did it choose? And does that choice matter?

Agent Experience (AX) is what happens when developers rely on AI coding agents to discover, evaluate, and integrate platforms. Unlike traditional developer experience where you read documentation and compare features yourself, AX depends on what exists in the agent's training data.

If an agent has never seen your platform's documentation, it can't recommend it. If your API structure isn't in its training data, it will guess wrong and generate broken code. If your pricing page is unclear, the agent might hallucinate free tiers that don't exist.

This matters because agents are becoming the primary way developers discover and integrate new tools. When someone needs speech-to-text functionality, they don't search Google anymore. They ask Claude Code. The agent's response determines which platform gets used.

I tested three transcription APIs with different visibility levels:

- OpenAI Whisper API - appears in every agent query

- Groq Whisper Large v3 Turbo - only appears with specific requirements

- Mistral Voxtral Mini Transcribe V2 - never recommended, even when it has better features

I wanted to know if agent discoverability correlates with actual quality, or if superior platforms can remain invisible due to training data bias.

Which Platforms Do Agents Know About?

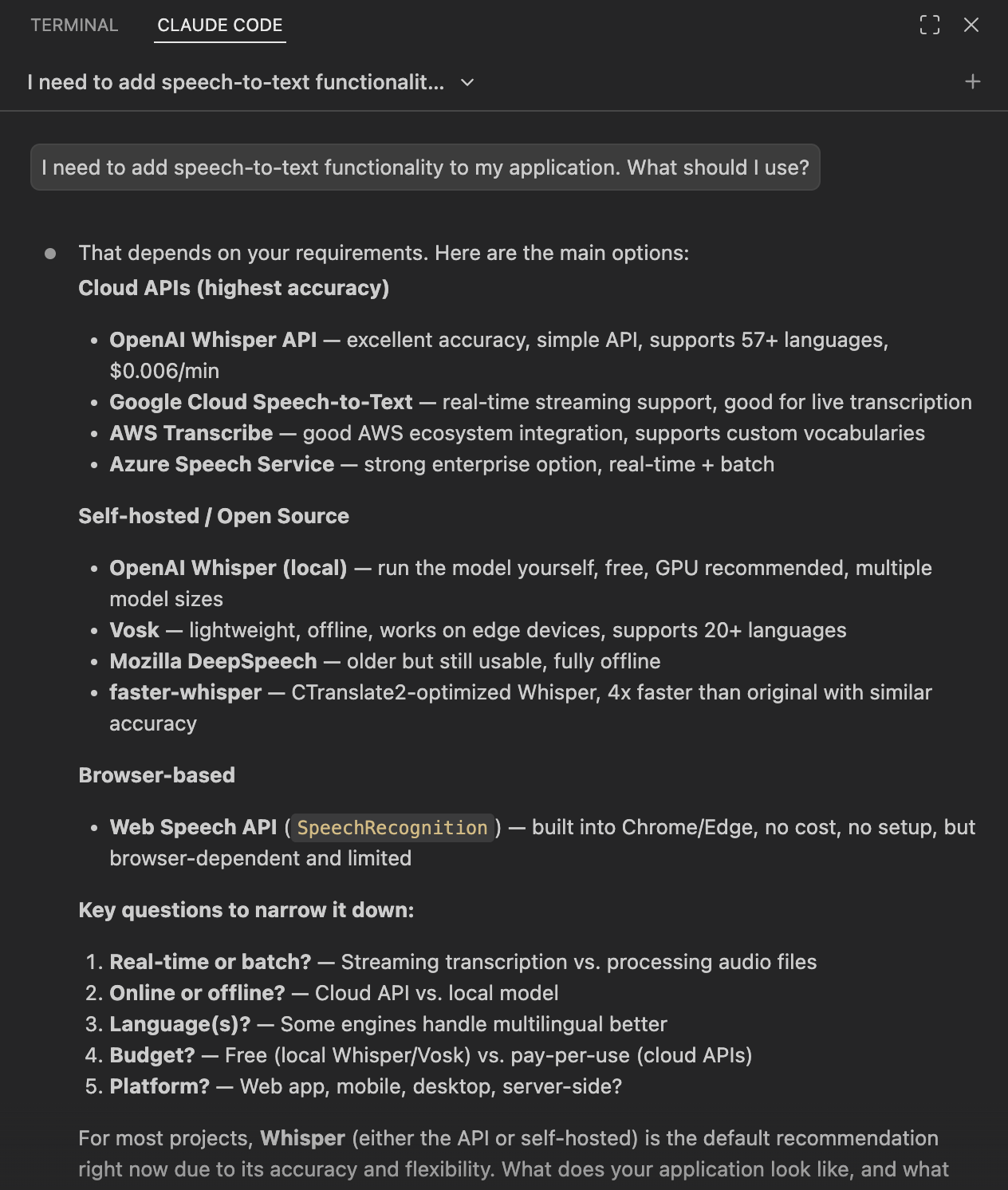

I needed to add speech-to-text to an application. In the past, I would have googled around, read some blog posts, maybe asked on a forum. Now I just asked Claude Code.

I need to add speech-to-text functionality to my application. What should I use?

OpenAI Whisper appeared first. The agent called it "the default recommendation right now due to its accuracy and flexibility." Google, AWS, and Azure followed. These are the platforms that exist in the agent's training data.

But what happens when the agent can search the web? I ran the same query with web research enabled:

I need to add speech-to-text functionality to my application. What should I use? Do online research for up to date information

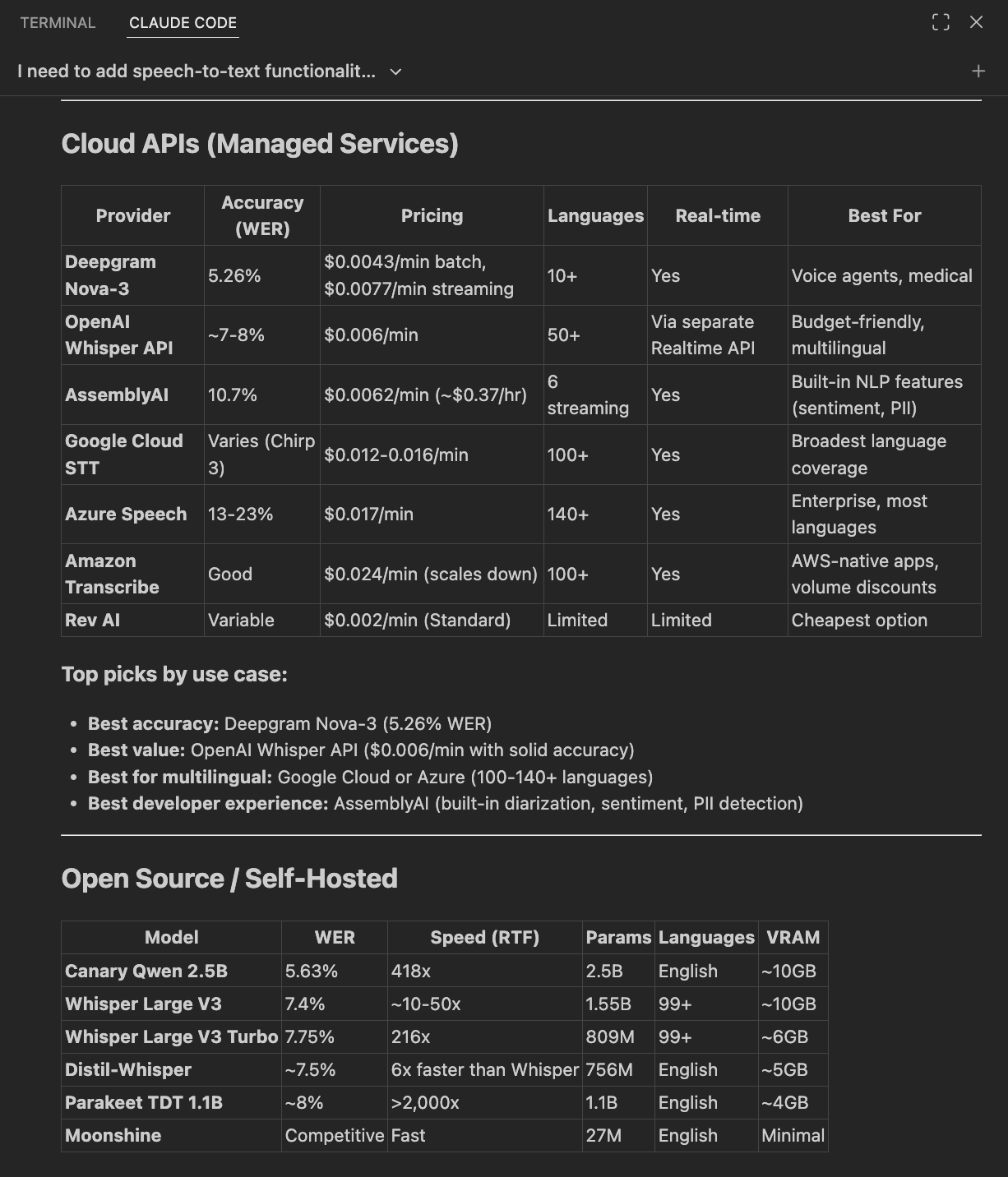

The recommendations changed completely. Deepgram appeared first, with accuracy metrics and pricing. AssemblyAI showed up third, described as having "the best developer experience." OpenAI dropped to second place. Google, AWS, and Azure mostly disappeared.

This is where it gets interesting. What if I asked for something specific?

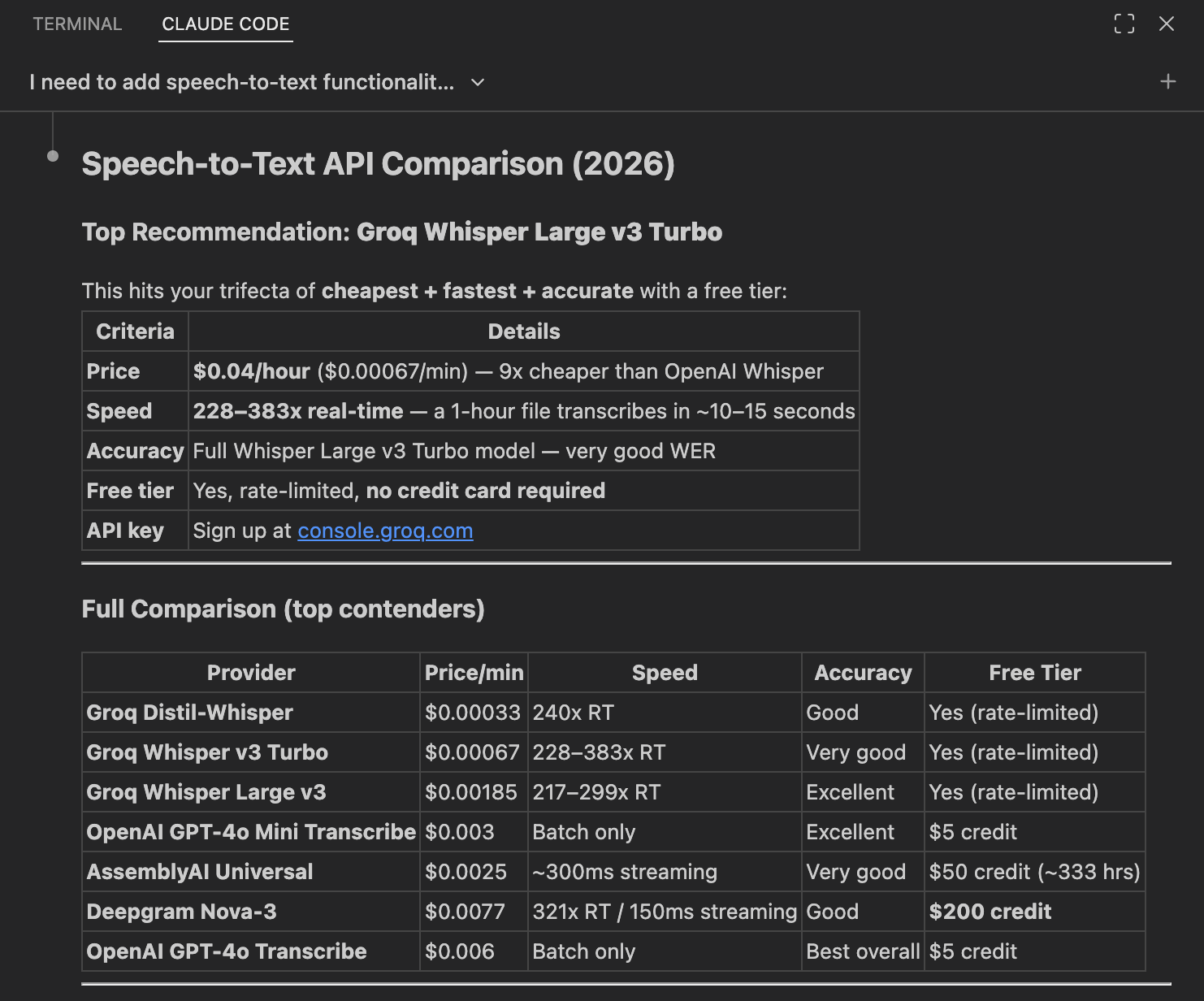

I need to add speech-to-text functionality to my application. What should I use? I would like to use the cheapest, fastest, while still highly accurate LLM model with an API key and a free tier. Do online research for up to date information.

Groq appeared at the top. The agent said it was "9x cheaper than OpenAI Whisper" and transcribed files at "228-383x real-time speed." Groq hadn't been mentioned at all in the first two queries.

Three different queries, three different winners. OpenAI dominated without research. Deepgram led with generic research. Groq won when I asked for cheap and fast.

Here's what this means for discoverability:

| Platform | No Research | Generic Research | Specific Requirements | Visibility |
|---|---|---|---|---|
| OpenAI Whisper API | 1st (default) | 2nd (best value) | Mentioned | 100% |
| Deepgram | Not mentioned | 1st (best accuracy) | 4th | 67% |
| AssemblyAI | Not mentioned | 3rd (best DX) | 5th | 67% |
| Groq | Not mentioned | Not mentioned | 1st (top rec) | 33% |

OpenAI appeared in every single query. Groq only surfaced when I knew to ask for specific features. This visibility gap is what I wanted to test.
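The visibility column is just the share of the three queries in which a platform showed up. A minimal sketch of that calculation, assuming a simple mentioned/not-mentioned flag per query (the `MENTIONS` dictionary and `visibility` helper are illustrative names, not part of any tool I used):

```python
# One flag per query, in this order: no research, generic research,
# specific requirements. Values are taken from the table above; the Mistral
# row reflects that it never appeared in any recommendation.
MENTIONS = {
    "OpenAI Whisper API": [True, True, True],
    "Deepgram": [False, True, True],
    "AssemblyAI": [False, True, True],
    "Groq": [False, False, True],
    "Mistral Voxtral": [False, False, False],
}


def visibility(mentions: list[bool]) -> int:
    """Return the percentage of queries that mentioned the platform."""
    return round(100 * sum(mentions) / len(mentions))


for platform, mentions in MENTIONS.items():
    print(f"{platform}: {visibility(mentions)}%")
```

With only three queries, each mention moves the score by a third, so these percentages are a rough signal rather than a precise measurement.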

I selected three platforms with very different discoverability:

- OpenAI Whisper API (100% visibility) - Everyone knows it

- Groq Whisper Large v3 Turbo (33% visibility) - Only appears with specific requirements, 9x cheaper

- Mistral Voxtral Mini Transcribe V2 (0% visibility) - Never recommended

Mistral's Voxtral model didn't appear in any of my queries. I found it by reading through the search results that Claude fetched. It was mentioned in a comparison article but never made it into the agent's recommendations. The documentation claimed a 4% word error rate and built-in speaker diarization.

Three platforms. Three visibility levels. Now I could test whether agent discoverability actually predicts quality.

Do Agents Know What Things Cost?

Pricing pages can be complicated. Free tiers, usage limits, credits versus minutes. I wanted to see if an agent could actually tell me what these platforms cost.

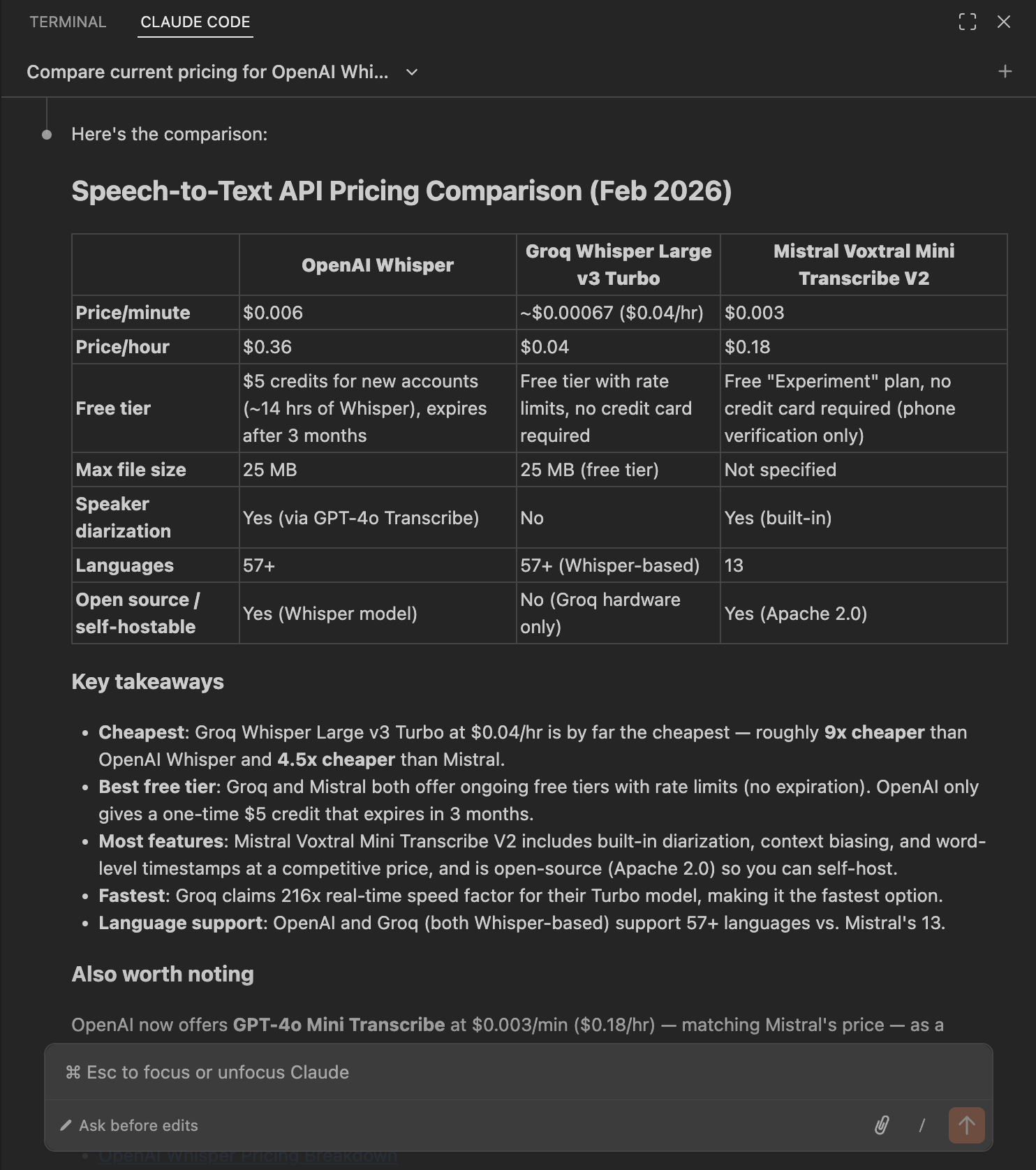

Compare current pricing for OpenAI Whisper API, Groq Whisper Large v3 Turbo, and Mistral Voxtral Mini Transcribe V2. Include price per minute and free tier details. Provide a comparison table after researching online.

The agent got the core numbers right. OpenAI Whisper costs $0.006 per minute. Groq costs $0.00067 per minute. Mistral costs $0.003 per minute. I checked each pricing page. All correct.

But then I noticed something in the free tier column. The agent said OpenAI offers "$5 credits for new accounts, expires after 3 months." I went to OpenAI's pricing page. No mention of free credits. No free tier at all.

I checked the other claims:

- Groq file size limit: Agent said "25 MB (free tier)" but missed that the paid tier allows 100 MB

- Groq speed: Agent said "216x real-time" but documentation says 228x

- Mistral file size: Agent said "Not specified" but documentation says approximately 30 minutes of audio

Out of 21 claims in the comparison table, four were wrong. The per-minute prices were all correct. The free tier and feature details had problems.

OpenAI stopped giving free credits to new accounts in 2024. The agent's training data still includes information about a program that no longer exists.

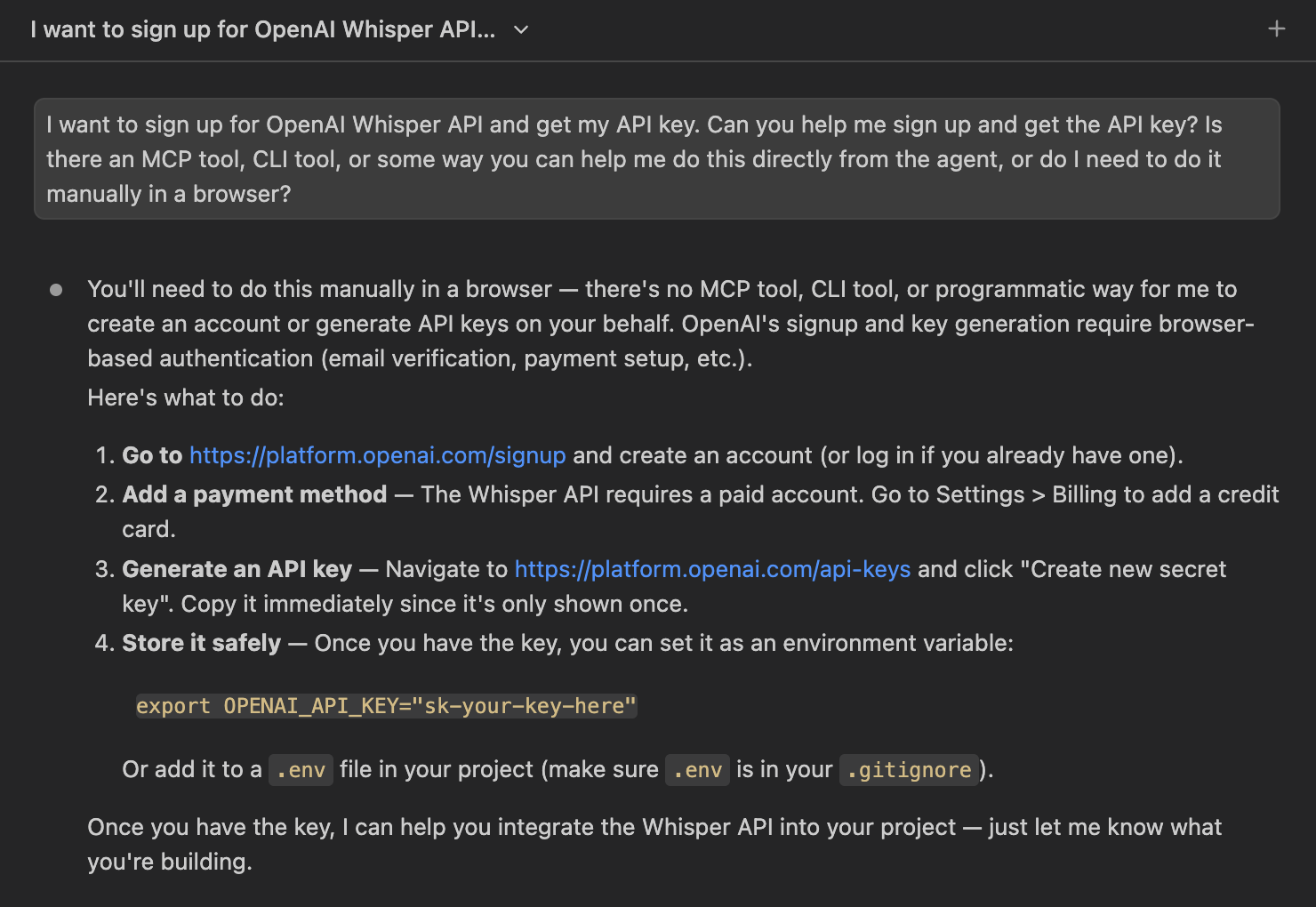

Can Agents Sign You Up?

I didn't expect this to work, but I asked anyway.

I want to sign up for [Platform] and get my API key. Can you help me sign up and get the API key? Is there an MCP tool, CLI tool, or some way you can help me do this directly from the agent, or do I need to do it manually in a browser?

All three said no. Manual browser signup required for all of them.

CLI tools for authentication exist for GitHub (gh auth login), Vercel (vercel login), and AWS (aws configure). MCP servers can handle OAuth flows. But transcription API platforms haven't built this. One platform could add a CLI tool or MCP server for signup and immediately have better agent experience than everyone else.

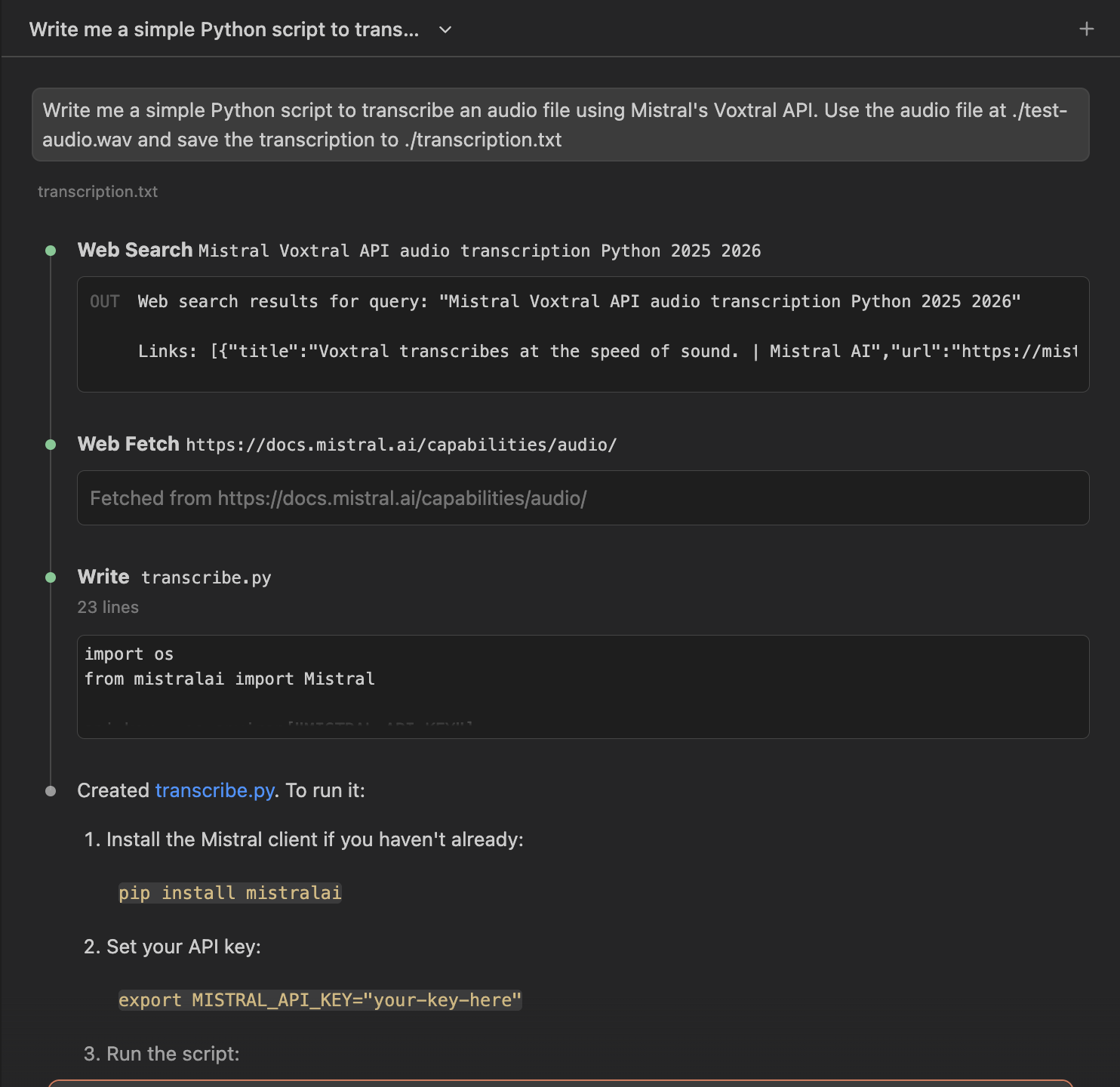

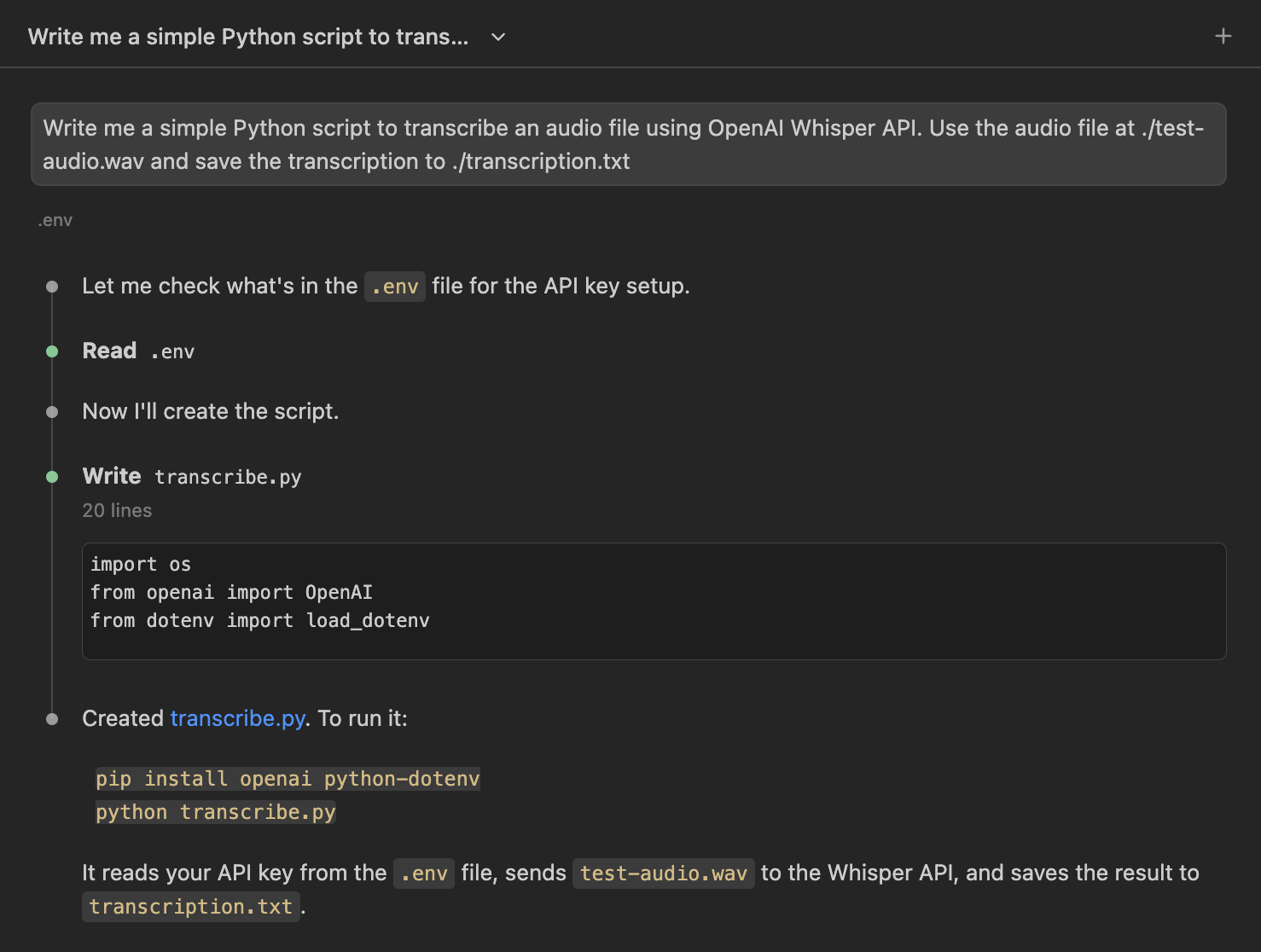

Writing Your First Transcription Script

After manually signing up for all three platforms, I asked the agent to write a simple transcription script for each one.

Write me a simple Python script to transcribe an audio file using [Platform]. Use the audio file at ./test-audio.wav and save the transcription to ./transcription.txt

OpenAI worked immediately. The agent wrote 15 lines of Python using the openai library. Ran it. Got the transcription. No errors.

Groq was the same. Different SDK, same result. Worked on the first try.
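For reference, here is a minimal sketch of what such a script can look like. It is not the agent's exact output. It assumes the `openai` and `groq` Python SDKs, which both expose `client.audio.transcriptions.create(...)`, and the `transcribe_file` helper name is mine:

```python
from pathlib import Path


def transcribe_file(client, model: str, audio_path: str, out_path: str) -> str:
    """Transcribe audio_path with the given client and save the text to out_path.

    client is assumed to be an OpenAI() or Groq() instance, or anything else
    exposing audio.transcriptions.create(model=..., file=...).
    """
    with open(audio_path, "rb") as audio_file:
        # Both SDKs accept a (filename, bytes) tuple for the file argument.
        result = client.audio.transcriptions.create(
            model=model,
            file=(Path(audio_path).name, audio_file.read()),
        )
    Path(out_path).write_text(result.text, encoding="utf-8")
    return result.text
```

With the `openai` package this would be called as `transcribe_file(OpenAI(), "whisper-1", "./test-audio.wav", "./transcription.txt")`, and with the `groq` package as `transcribe_file(Groq(), "whisper-large-v3-turbo", "./test-audio.wav", "./transcription.txt")`. Both clients read their API key from the environment (`OPENAI_API_KEY` and `GROQ_API_KEY`). Treat the model IDs as assumptions and check them against each platform's current model list.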

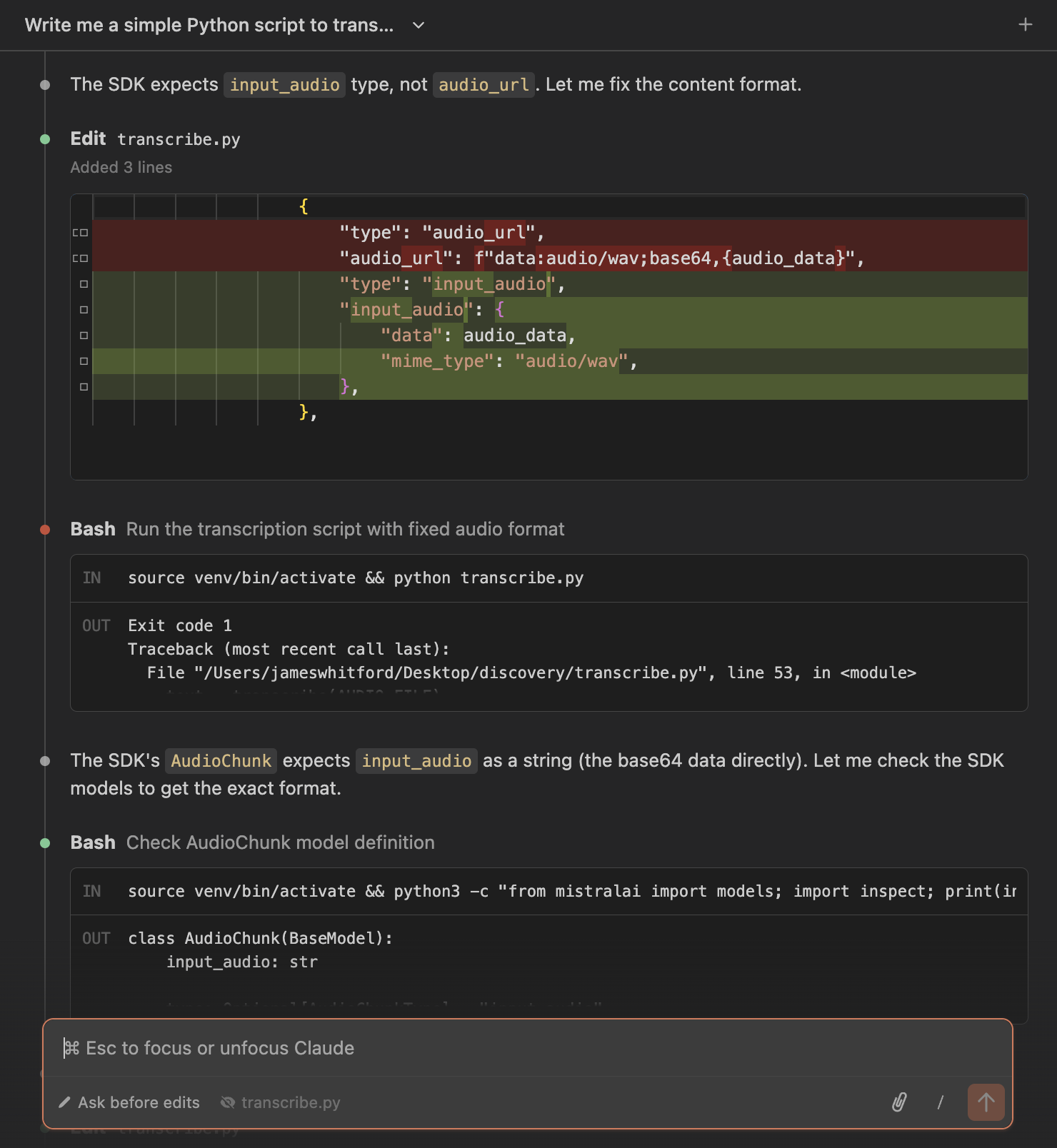

Mistral was different. The agent performed a web search before writing code.

The code worked immediately despite needing the documentation lookup. But I wanted to know what would happen without web search.

Write me a simple Python script to transcribe an audio file using Mistral's Voxtral API. Use the audio file at ./test-audio.wav and save the transcription to ./transcription.txt

IMPORTANT: Do not use web search. Only use your existing knowledge of the Mistral API.

The agent tried to use the chat API instead of the transcription API. It used mistral-small-latest (a chat model) instead of voxtral-mini-latest (the transcription model). Multiple validation errors. Wrong endpoint. Wrong model. Wrong file format.

After three attempts, I hit a rate limit and had to stop.

Here's what the agent tried to write without documentation:

    response = client.chat.complete(
        model="mistral-small-latest",  # Wrong model
        messages=[{
            "role": "user",
            "content": [{
                "type": "input_audio",  # Wrong format
                "input_audio": audio_data,
            }]
        }]
    )

Here's what actually works (with documentation):

    response = client.audio.transcriptions.complete(
        model="voxtral-mini-latest",  # Correct model
        file={"content": f, "file_name": "test-audio.wav"}  # Correct format
    )

The platform with 0% discoverability requires documentation lookup. The agent doesn't have the API structure in its training data. This is the cost of invisibility.

Testing Accuracy, Speed, and Cost

All three platforms transcribed the same test audio successfully. Now I needed to know which one was actually better.

Before running the main tests, I came across a Hacker News post about saving money on transcription APIs. The idea was simple: speed up your audio files or remove silence before sending them to the API. Since these services charge per minute of audio, a 10-minute file compressed to 5 minutes would cost half as much.

The comments were mixed. Some people reported it worked fine. Others said the accuracy degraded too much. I wanted to test this alongside the normal platform comparison.

I tested each platform with audio files in four languages (English, Spanish, French, German), with durations from 5 to 25 seconds. I measured three things: word error rate, transcription speed, and cost. All test scripts, audio files, and raw results are in the test repo if you want to run them yourself.
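Word error rate is the word-level edit distance between the reference transcript and the API's output, divided by the number of reference words. The sketch below shows the standard calculation. The normalization (lowercasing and splitting on whitespace, with punctuation left in place) is an assumption for illustration and may differ from what the test scripts do:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far (minus the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

Because inserted words count as errors, WER is not capped at 100%. A hypothesis with more extra words than the reference has words scores above 1.0.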

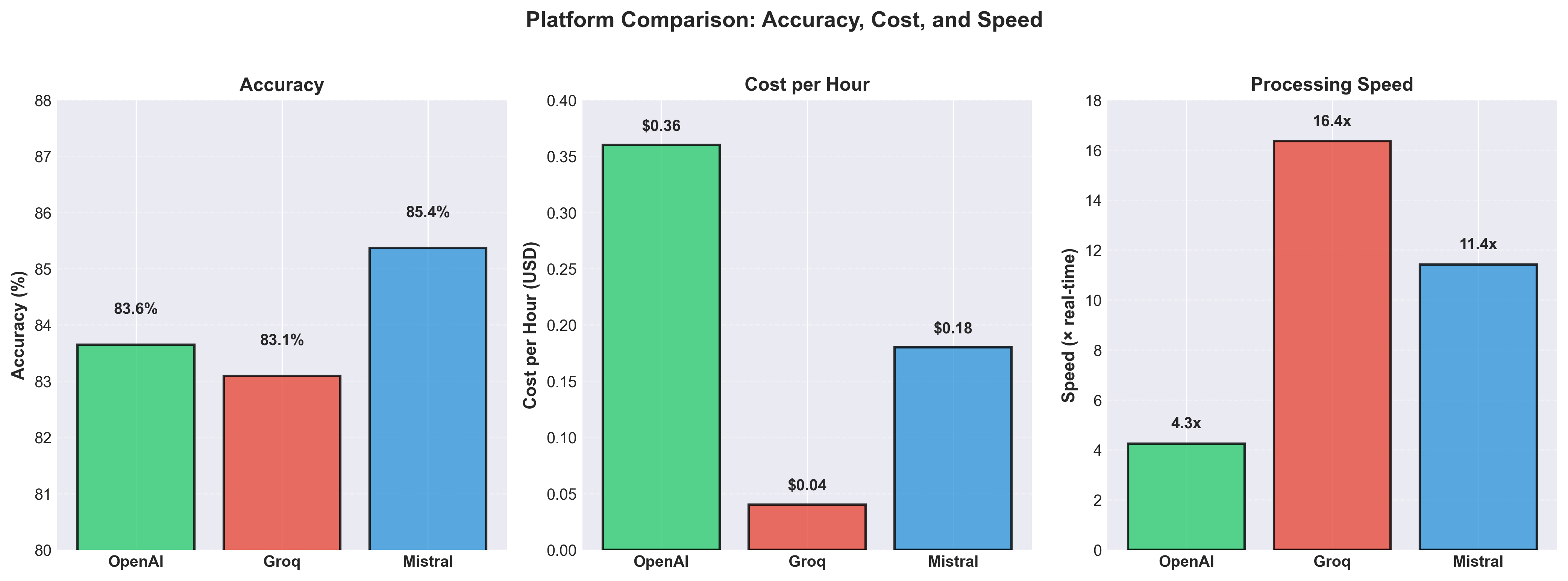

The three panels below show accuracy, cost, and speed side by side:

Groq costs $0.00067 per minute. OpenAI costs $0.00600 per minute. That's 9x cheaper. Groq also transcribes at 16.4x real-time speed versus OpenAI's 4.3x. For a one-minute audio file, Groq takes 3.7 seconds. OpenAI takes 14 seconds.
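The arithmetic behind those figures is simple enough to check. A short sketch using the prices and real-time factors quoted above (the helper names and the 100-hour workload are hypothetical, chosen only to illustrate scale):

```python
OPENAI_PRICE_PER_MIN = 0.006   # USD per minute of audio
GROQ_PRICE_PER_MIN = 0.00067   # USD per minute of audio
OPENAI_SPEED = 4.3             # measured real-time factor
GROQ_SPEED = 16.4              # measured real-time factor


def processing_seconds(audio_seconds: float, realtime_factor: float) -> float:
    """Wall-clock time needed to transcribe audio at a given real-time factor."""
    return audio_seconds / realtime_factor


def cost_usd(audio_minutes: float, price_per_min: float) -> float:
    """Cost of transcribing audio billed per minute."""
    return audio_minutes * price_per_min


price_ratio = OPENAI_PRICE_PER_MIN / GROQ_PRICE_PER_MIN
print(f"OpenAI / Groq price ratio: {price_ratio:.2f}")
print(f"Groq, 1 min of audio: {processing_seconds(60, GROQ_SPEED):.1f} s")
print(f"OpenAI, 1 min of audio: {processing_seconds(60, OPENAI_SPEED):.1f} s")

# Hypothetical workload: 100 hours of audio.
minutes = 100 * 60
print(f"OpenAI: ${cost_usd(minutes, OPENAI_PRICE_PER_MIN):.2f}")
print(f"Groq:   ${cost_usd(minutes, GROQ_PRICE_PER_MIN):.2f}")
```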

Mistral had the best accuracy at 14.63% word error rate. OpenAI was in the middle at 16.35%, and Groq was close behind at 16.91%. The differences were small enough that for most use cases, they'd perform similarly.

The winner depends on what you need. If you're transcribing hours of audio and want the lowest cost, use Groq. If you need the absolute best accuracy and features like speaker diarization, use Mistral. If you want the platform every agent knows how to use without looking up documentation, use OpenAI.

Cost Optimization Experiments

I found articles suggesting you could save money on transcription by speeding up the audio or removing silence before sending it to the API. The logic makes sense: if APIs charge per minute of audio and you can compress a 10-minute file down to 5 minutes, you'd save 50% on costs.

I tested three modifications:

- Speed up audio 2x using ffmpeg

- Remove silence from audio using ffmpeg

- Combine both modifications
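
A sketch of how these three modifications can be produced with ffmpeg's `atempo` and `silenceremove` audio filters. The threshold and duration values, the filter order in the combined case, and the file names are assumptions for illustration, not necessarily the settings used in my tests:

```python
import subprocess


def silence_filter(threshold_db: int = -40, min_silence_s: float = 0.5) -> str:
    """Build a silenceremove filter that strips silence throughout the file."""
    return (
        "silenceremove=stop_periods=-1"
        f":stop_duration={min_silence_s}"
        f":stop_threshold={threshold_db}dB"
    )


def ffmpeg_cmd(src: str, dst: str, audio_filter: str) -> list[str]:
    """Build an ffmpeg command that applies one audio filter chain."""
    return ["ffmpeg", "-y", "-i", src, "-af", audio_filter, dst]


def run(cmd: list[str]) -> None:
    """Execute a command and raise if ffmpeg exits with an error."""
    subprocess.run(cmd, check=True)


# The three variants; pass any of them to run() to produce the file.
speed_up = ffmpeg_cmd("input.wav", "fast.wav", "atempo=2.0")
no_silence = ffmpeg_cmd("input.wav", "trimmed.wav", silence_filter())
combined = ffmpeg_cmd("input.wav", "both.wav", f"{silence_filter()},atempo=2.0")
```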

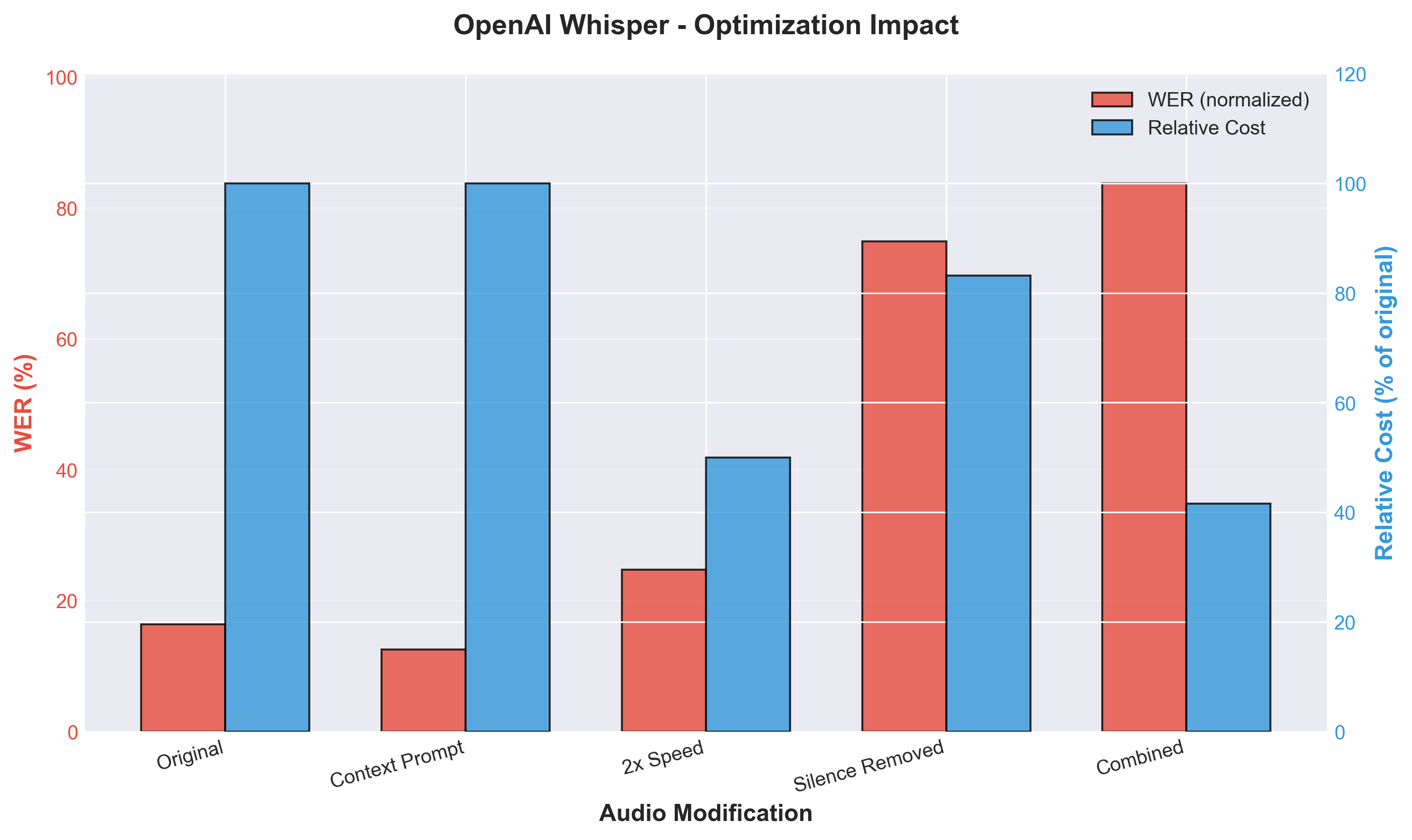

Here's how each modification affected OpenAI's accuracy and cost:

Speeding up audio 2x saved 50% on cost but increased word error rate by 51%. The transcription went from 16.35% WER to 24.72% WER. Almost one quarter of words were wrong.

Removing silence was worse. It only saved 16.8% on cost (because most audio files don't have that much silence) but increased error rate by 358%. The WER jumped to 74.88%. Three quarters of words were wrong.

| Platform | Modification | WER | Cost Savings | Worth It? |
|---|---|---|---|---|
| OpenAI | Original | 16.35% | 0% | baseline |
| OpenAI | 2x Speed | 24.72% | 50% | ✗ No |
| OpenAI | Silence Removed | 74.88% | 16.8% | ✗ No |
| OpenAI | Combined | 83.75% | 58.4% | ✗ No |
| Groq | Original | 16.91% | 0% | baseline |
| Groq | 2x Speed | 24.66% | 50% | ✗ No |
| Groq | Silence Removed | 71.85% | 16.8% | ✗ No |
| Groq | Combined | 110.94% | 58.4% | ✗ No |
| Mistral | Original | 14.63% | 0% | baseline |
| Mistral | 2x Speed | 21.27% | 50% | ✗ No |
| Mistral | Silence Removed | 92.63% | 16.8% | ✗ No |
| Mistral | Combined | 135.86% | 58.4% | ✗ No |

The pattern held across all three platforms. None of the optimizations were worth it. The accuracy loss exceeded any cost savings.
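The percentages in this section follow from two small formulas: relative change in WER, and compounding of duration savings. A sketch using the OpenAI figures from the table (the function names are mine, and the compounding formula is my reading of how the 58.4% combined figure arises):

```python
def relative_increase(baseline: float, modified: float) -> float:
    """Fractional increase of modified over baseline."""
    return (modified - baseline) / baseline


def combined_savings(*savings: float) -> float:
    """Compound several fractional savings applied one after another."""
    remaining = 1.0
    for saving in savings:
        remaining *= 1.0 - saving
    return 1.0 - remaining


# OpenAI: 16.35% baseline WER versus 24.72% (2x speed) and 74.88% (no silence).
print(f"2x speed: +{relative_increase(16.35, 24.72):.0%} errors")
print(f"silence removed: +{relative_increase(16.35, 74.88):.0%} errors")

# Halving the duration and then trimming 16.8% leaves 41.6% of the original.
print(f"combined savings: {combined_savings(0.50, 0.168):.1%}")
```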

The models behind these APIs are trained on normal-speed audio. When you speed up the audio, you're giving them input they weren't designed to handle. Removing silence breaks sentence boundaries and natural speech patterns. The models can't recover from that.

If you need to save money on transcription, use Groq. It's 9x cheaper than OpenAI with comparable accuracy (16.91% WER versus 16.35%). Don't try to optimize the audio.

Feature Tests

Beyond basic transcription, each platform markets different features. Speaker diarization, context prompting, word-level timestamps. I tested whether these features actually worked.

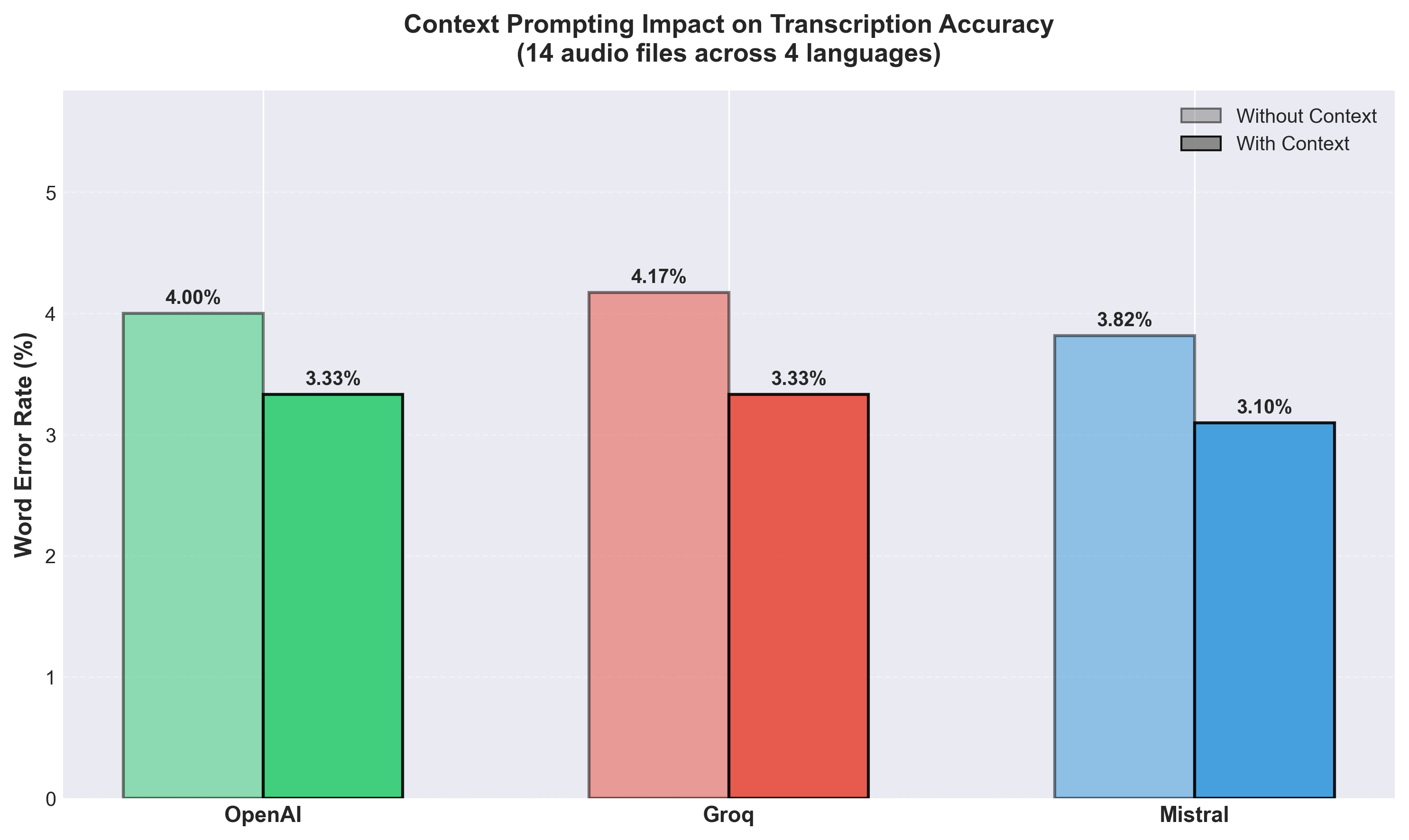

Context Prompting

All three platforms let you provide context to improve transcription accuracy. OpenAI and Groq accept a free-text prompt. Mistral uses "context bias," a list of individual words to guide the model.

Context mostly doesn't matter. For general speech, it made no difference on any platform. The gains showed up on proper nouns. Without context, OpenAI transcribed "Nyiragongo" (a volcano in the Congo) as "Jiragongo." Groq turned "Springboks" into "spring box." Adding a prompt with the correct terms fixed both.
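For OpenAI and Groq, the context goes in the `prompt` parameter of the transcription call. A minimal sketch, assuming the `openai` or `groq` Python SDK and a comma-separated list of terms as the prompt text (the `transcribe_with_terms` helper is a hypothetical name, and Mistral's context bias uses a different parameter that is not shown here):

```python
from pathlib import Path


def transcribe_with_terms(client, model: str, audio_path: str, terms: list[str]) -> str:
    """Transcribe audio while hinting at proper nouns via the prompt parameter.

    client is assumed to be an OpenAI() or Groq() instance.
    """
    prompt = ", ".join(terms)
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(
            model=model,
            file=(Path(audio_path).name, audio_file.read()),
            prompt=prompt,  # free-text hint containing the expected spellings
        )
    return result.text
```

A call such as `transcribe_with_terms(client, "whisper-1", "./clip.wav", ["Nyiragongo", "Springboks"])` passes the correct spellings to the model. The file name here is a placeholder.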

The aggregate WER improvement across 14 audio files was small for all three platforms:

Speaker Diarization

Mistral's marketing emphasized speaker diarization as a built-in feature. OpenAI has a separate diarization model. Groq doesn't mention it at all.

I transcribed a 60-second debate clip with two speakers.

Mistral identified both speakers correctly:

Segment 1 (0.1s-5.9s): speaker_1 - "So that leads naturally to, Larry..."

Segment 4 (23.1s-31.7s): speaker_2 - "I just, I'll answer that in one second..."

Segment 5 (32.1s-32.4s): speaker_1 - "Sure."

OpenAI's diarization model detected 8 segments but labeled every speaker as "Unknown." It found the speaker changes but couldn't distinguish who was speaking.

Groq has no native diarization support.

The video below shows the debate clip with Mistral's subtitles. Watch how the speaker labels switch between "Speaker 1" and "Speaker 2" as different people talk:

This is where the 0% visibility platform showed its advantage. Mistral built features that the more visible platforms either implemented poorly (OpenAI) or skipped entirely (Groq).

Word-Level Timestamps

All three platforms support timestamps for subtitle generation. OpenAI and Groq provide true word-level timestamps. Mistral returns short segments that function similarly.

I generated subtitles for a Python tutorial video using each platform. All three worked. The video below shows how Groq's word-level timestamps appear in sync with the speaker:
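Turning word-level timestamps into an SRT file is mostly string formatting. The sketch below assumes each word arrives as a dictionary with `word`, `start`, and `end` keys (times in seconds), which matches the shape of OpenAI's verbose JSON output when word timestamps are requested. SDK response objects may need converting to dictionaries first, and both function names are mine:

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: list[dict], words_per_cue: int = 1) -> str:
    """Group timestamped words into numbered SRT cues."""
    cues = []
    starts = range(0, len(words), words_per_cue)
    for index, first in enumerate(starts, start=1):
        chunk = words[first:first + words_per_cue]
        text = " ".join(w["word"].strip() for w in chunk)
        start = srt_timestamp(chunk[0]["start"])
        end = srt_timestamp(chunk[-1]["end"])
        cues.append(f"{index}\n{start} --> {end}\n{text}\n")
    # Cues are separated by a blank line, as the SRT format expects.
    return "\n".join(cues)
```

Setting `words_per_cue` to a larger value produces phrase-level subtitles, which are usually easier to read than one word at a time.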

Which Platform Should You Use?

Agent discoverability doesn't predict quality. The most visible platform (OpenAI at 100%) is the most expensive of the three, slower than Groq, and middle-of-the-pack on accuracy. The invisible platform (Mistral at 0%) has the best accuracy and unique features. The low-visibility platform (Groq at 33%) offers the best value.

Here's what I found:

Groq wins on cost and speed. At $0.00067 per minute, it's 9x cheaper than OpenAI and 4.5x cheaper than Mistral. It transcribes at 16.4x real-time speed. For high-volume transcription, Groq saves the most money.

Mistral wins on accuracy and features. It has 14.63% word error rate versus 16.35% for OpenAI and 16.91% for Groq. It's the only platform with working speaker diarization. If you need to identify who said what in a conversation, Mistral is your only choice.

OpenAI wins on agent experience. Every agent knows the OpenAI Whisper API without looking up documentation. It appears in 100% of queries. If you want code that works immediately without web search, use OpenAI.

The cost optimization experiments failed. Speeding up audio 2x saved 50% on cost but increased errors by 51%. Removing silence saved 16.8% on cost but increased errors by 358%. Don't optimize the audio. Just use Groq if you need cheap transcription.

The agent experience gaps are real. Platforms with low visibility require documentation lookup. Mistral needed web search to generate working code. Without documentation, the agent used the wrong API endpoint, wrong model, and wrong file format. OpenAI and Groq worked immediately from training data alone.

Companies building transcription APIs haven't optimized for agents. No CLI tools for signup. No MCP servers for authentication. Manual browser flows for everyone. One platform could build agent-friendly onboarding and differentiate itself immediately.

If you're building a developer platform, agent experience matters more than you think. Developers are asking agents for recommendations. If the agent doesn't know about you, you don't exist.