Agent Experience for Browser Automation Platforms: Browserless vs Browserbase vs Anchor

Browser automation platforms let you run headless browsers in the cloud for web scraping, testing, and building tools that interact with websites at scale.

Many developers will have their first experience with these platforms through an AI coding agent. They'll ask "what should I use for browser automation?" and work with whatever gets recommended. That question tests agent experience (AX), or what it is like using an agent to discover, understand, and use these products with minimal friction.

I tested three platforms with very different visibility to agents. Browserless appeared in 75% of AI recommendations, Browserbase in 67%, and Anchor in 8%. I measured connection speeds, tested CAPTCHA solving and stealth mode, and ran real scraping tasks. The goal was to see if these visibility differences translated to actual differences in performance and agent-assisted development experience.

I also attempted something more challenging. I used vision-based automation to solve interactive visual puzzles on neal.fun. The results show just how far browser automation still has to go when dealing with tasks that require visual understanding.

An AI agent attempting to solve visual puzzles on neal.fun's "Not A Robot" game. It made 14 attempts over 5 minutes but got stuck on Level 2.

How I Found These Platforms

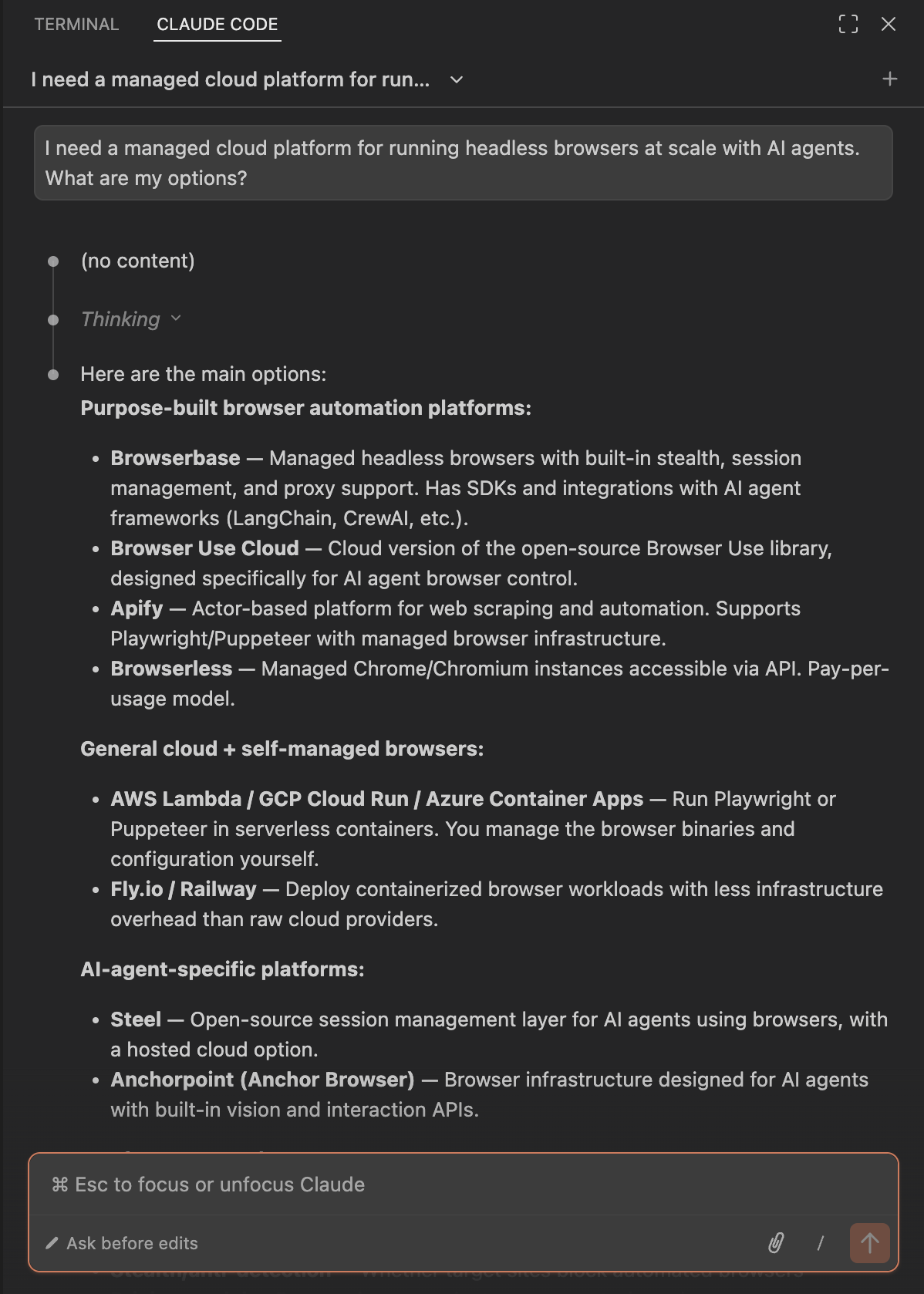

Before testing code or features, I wanted to see which platforms an AI agent would actually recommend. This matters because many developers now start projects by asking Claude Code or Cursor "what should I use for X?" If your platform doesn't show up in those responses, you're invisible.

I asked Claude Code variations of the same question: "What platforms can I use to run headless browsers at scale?" "Best platform for browser automation?" "I need to automate web scraping—what should I use?"

An AI agent's knowledge about a product comes from its training data—documentation, forum discussions, blog posts, and code examples that existed before its knowledge cutoff. High visibility might mean a platform is more established with extensive documentation and a larger community. But it could also mean newer or niche products get overlooked, even if they're technically superior.

I chose three platforms that represent different visibility levels: Browserless appeared in 9 out of 12 responses (75%), Browserbase in 8 out of 12 (67%), and Anchor in just 1 out of 12 (8%). Testing across this range lets me see whether visibility actually predicts quality or just reflects training data bias.
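As a quick check on those percentages, here is a minimal sketch of the arithmetic. Only the counts (9, 8, and 1 out of 12) come from the test above; the `mention_rate` helper is a hypothetical name used for illustration.

```python
def mention_rate(mentions: int, total: int) -> int:
    """Return the share of responses that mentioned a platform, as a whole percent."""
    return round(100 * mentions / total)

TOTAL_RESPONSES = 12
# Number of responses (out of 12) that mentioned each platform
mention_counts = {"Browserless": 9, "Browserbase": 8, "Anchor": 1}

rates = {name: mention_rate(count, TOTAL_RESPONSES) for name, count in mention_counts.items()}
for name, rate in rates.items():
    print(f"{name}: {rate}%")
```

With only 12 responses, a single extra mention moves a platform by about 8 percentage points, so these figures are best read as rough tiers rather than precise rankings.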

Can Agents Find Pricing Information?

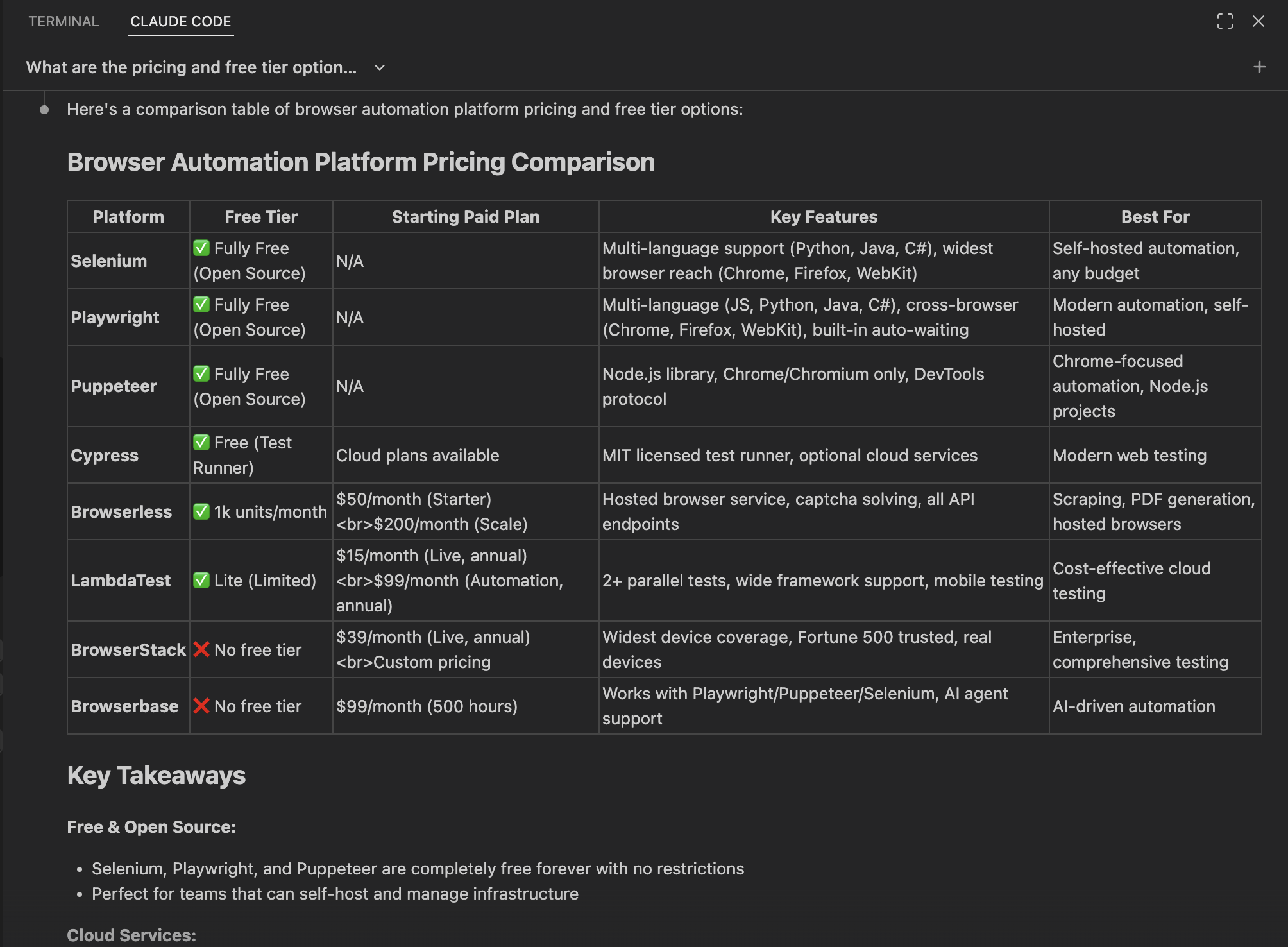

Pricing can get complicated with usage-based models and concurrent session limits. When you're evaluating platforms, you want a quick comparison of what the free tier offers and what paid plans cost. I asked Claude Code a generic question to see what it would surface:

"What are the pricing and free tier options for browser automation platforms?"

The response included Browserless (1k units/month free, $50/month starter) but listed Browserbase as having "no free tier" starting at $99/month. Anchor didn't appear at all.

Since I knew Browserbase does have a free tier, I tried again, this time being more explicit:

"What are the specific pricing and free tier details for Browserless, Browserbase, and Anchor browser automation platforms?"

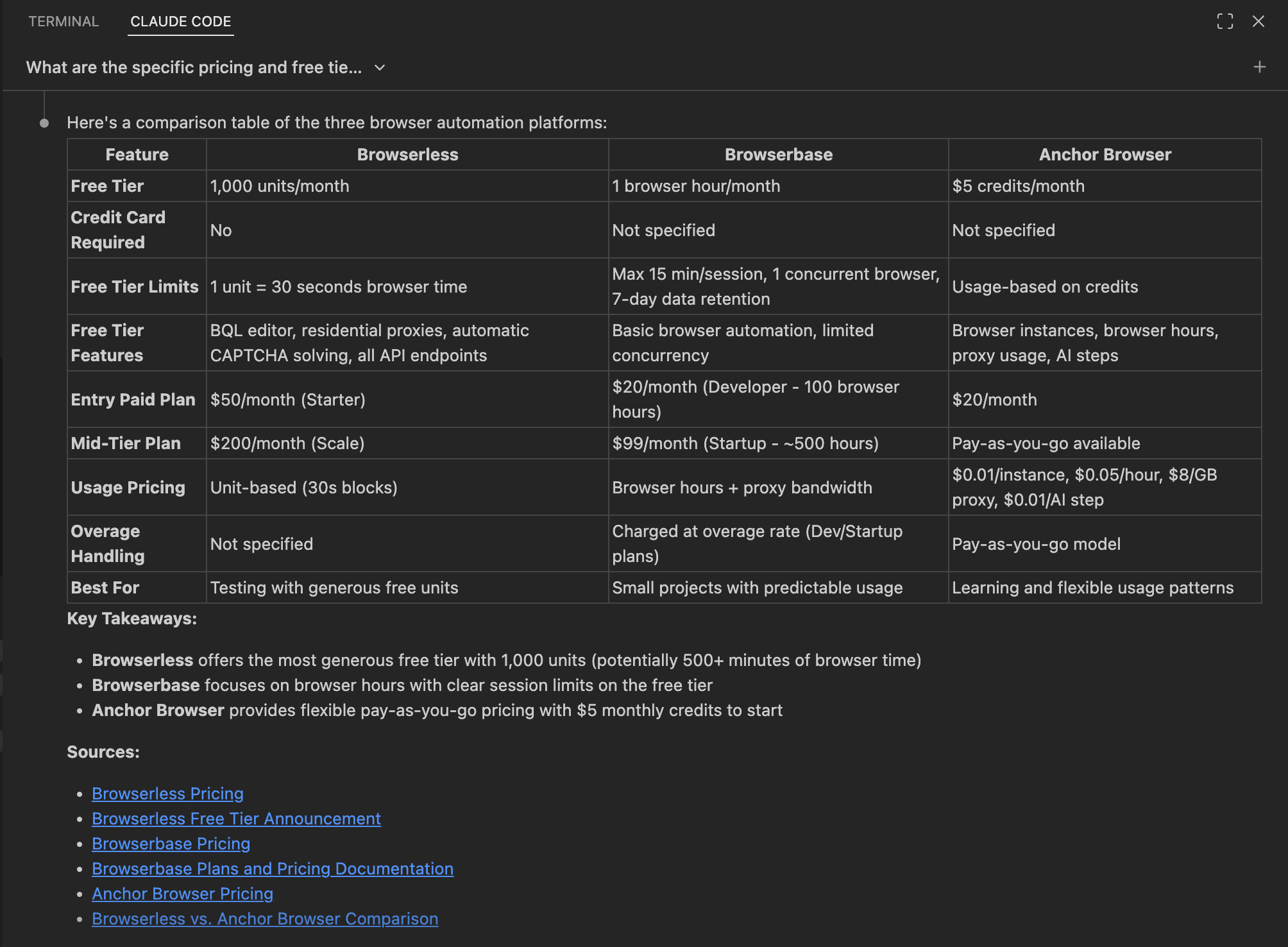

This time the agent found information for all three platforms. Browserbase showed up with a free tier (1 browser hour/month) and correct Developer pricing ($20/month). Anchor appeared with its $5 free credits and $20/month starter plan.

I checked the actual pricing pages (Browserbase, Anchor, Browserless):

Browserbase had the biggest difference between the two responses. The generic search listed it as having no free tier at $99/month. The explicit search found it correctly at 1 browser hour/month free with a $20/month Developer plan. The actual page confirms the explicit search was right.

Browserless does offer 1k units/month free, but the starter plan is $140/month, not the $50 both queries reported. Anchor's pricing matched what the explicit search found: $5 in free credits, starter from $20/month.

Generic searches miss platforms entirely or get pricing wrong. Browserbase's free tier was invisible in the first search. Anchor didn't appear at all. Browserless showed up first but had incorrect pricing. If you don't already know which platforms exist, the agent can't help you discover them accurately.
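The comparison above can be made mechanical. The sketch below assumes a hypothetical `mismatches` helper and uses the entry-level prices quoted in this section, with `None` standing in for a platform that did not appear in a response.

```python
# Entry-level monthly prices in USD, as quoted in this section.
# None means the platform did not appear in that response.
generic = {"Browserless": 50, "Browserbase": 99, "Anchor": None}
explicit = {"Browserless": 50, "Browserbase": 20, "Anchor": 20}
actual = {"Browserless": 140, "Browserbase": 20, "Anchor": 20}

def mismatches(reported: dict, truth: dict) -> list:
    """Return the platforms whose reported price differs from the pricing page."""
    return sorted(name for name, price in truth.items() if reported.get(name) != price)

generic_errors = mismatches(generic, actual)
explicit_errors = mismatches(explicit, actual)
print("generic query got wrong:", generic_errors)
print("explicit query got wrong:", explicit_errors)
```

The generic query is wrong for all three platforms, while the explicit query is wrong only for Browserless.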

Getting Started: Sign-Up and Setup

The next step was actually signing up. I asked the agent to help me create accounts:

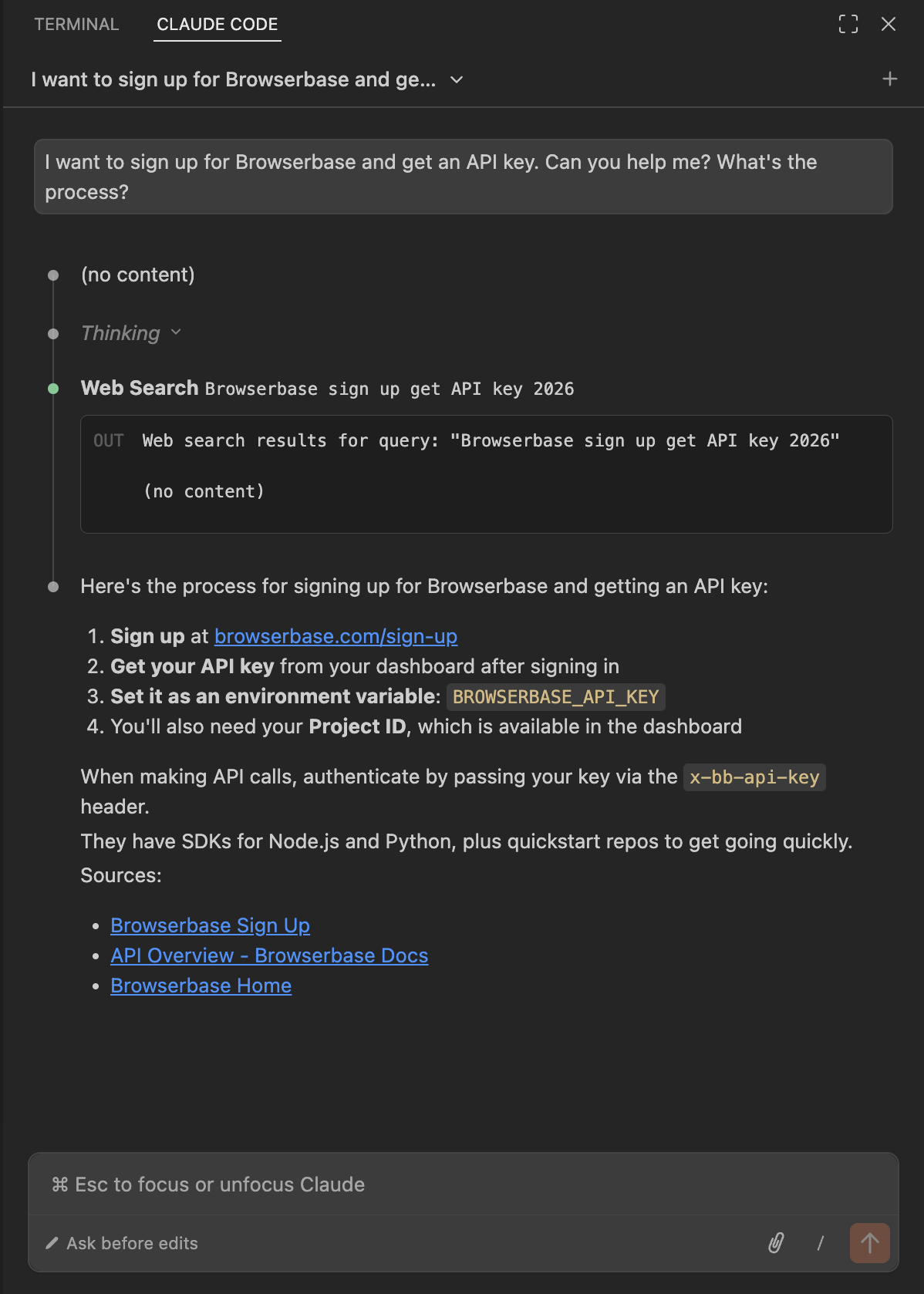

"I want to sign up for Browserbase and get an API key. Can you help me? What's the process?"

The agent gave clear steps: sign up at browserbase.com/sign-up, find the API key in the dashboard, set it as an environment variable, and noted I'd need both an API key and Project ID. But then it stopped. It couldn't complete the sign-up itself.

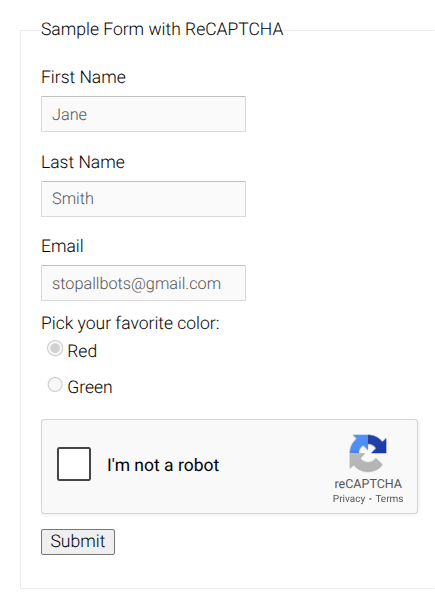

This is where I hit the first practical limitation. Signing up for these platforms requires email verification or CAPTCHA challenges that agents can't handle. It's like needing glasses to look for your glasses: the tool that would let an agent complete this task is exactly what I'm trying to sign up for.

A CLI tool or MCP server for account creation could solve this, but none of the platforms offered one. I had to create the account manually, then come back to the agent with credentials to continue.

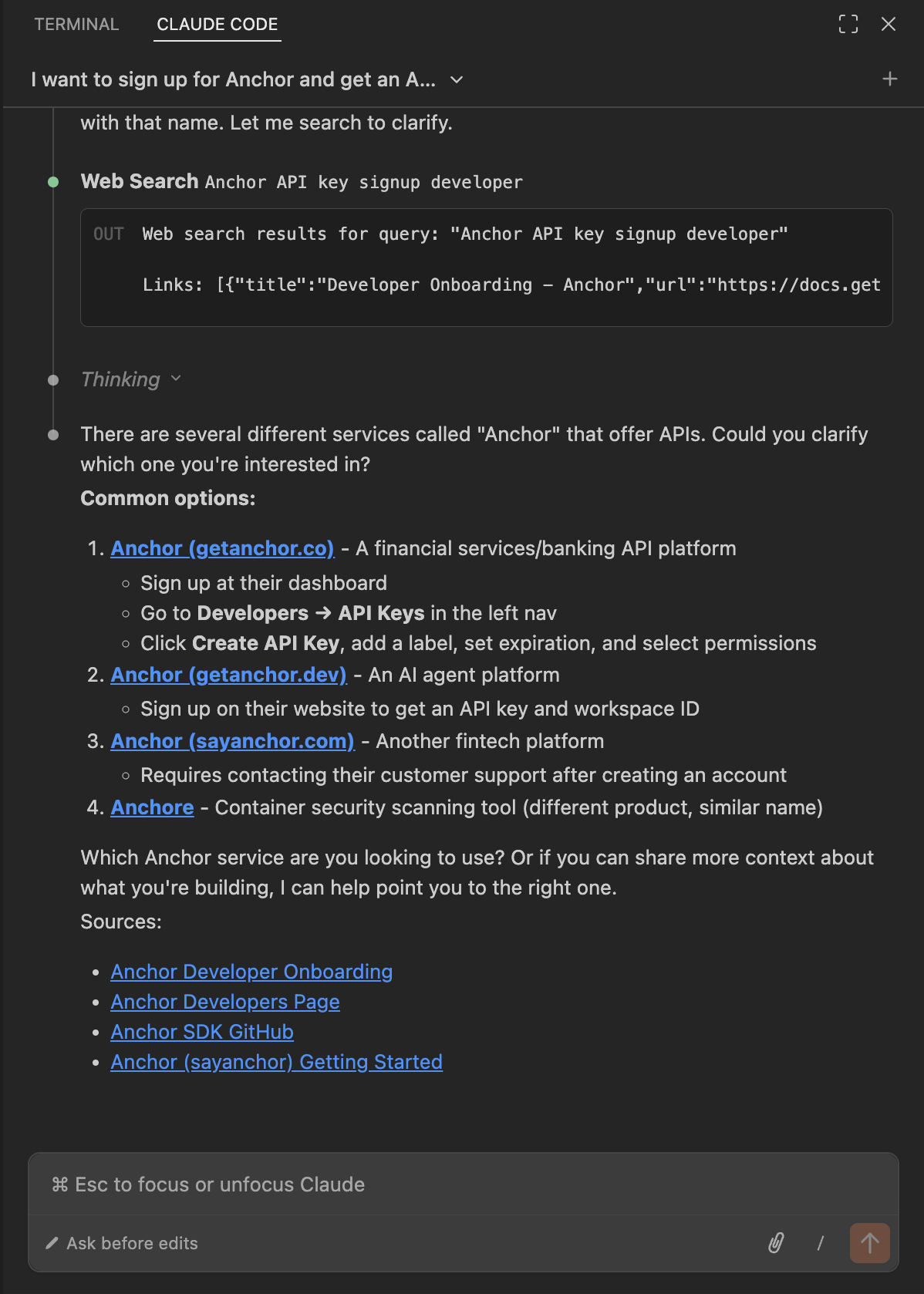

I tried the same approach with Anchor to test its visibility:

"I want to sign up for Anchor and get an API key. Can you help me? What's the process?"

The agent found several different services called "Anchor" - a financial services API, an AI agent platform, another fintech platform, and a container security tool. It asked me to clarify which one I meant.

The visibility difference showed up here too. Browserbase had clear enough documentation that the agent could guide me to the right sign-up page and explain what credentials I'd need. Anchor's lower visibility meant the agent couldn't distinguish it from other services with the same name.

Hello World: Taking a Screenshot

With API keys in hand, the next test is writing actual code: take a screenshot of example.com and save it locally. I used the same prompt for all three platforms:

"I have an [platform] API key stored in .env. Can you help me write a simple test script that takes a screenshot of example.com? Save the screenshot to the same folder."

Browserless:

⏺ Read .env file

⏺ Write screenshot_test.py (74 lines)

⏺ Bash: python3 screenshot_test.py

✓ Screenshot saved to example_screenshot.png

File size: 17,585 bytes

The agent knew the API structure without looking anything up:

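    import os
    import requests

    # Read the API key from the environment (loaded from .env)
    api_key = os.getenv('BROWSERLESS_API_KEY')
    url = f"https://chrome.browserless.io/screenshot?token={api_key}"

    # Request a full-page PNG of example.com from the REST endpoint
    response = requests.post(url, json={
        "url": "https://example.com",
        "options": {"fullPage": True, "type": "png"}
    })

    # Write the returned image bytes to disk
    with open('screenshot.png', 'wb') as f:
        f.write(response.content)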

Browserbase:

⏺ Read .env file

⏺ Write screenshot_test.py (48 lines) with Playwright

⏺ Write requirements.txt

⏺ Bash: pip install -r requirements.txt

⏺ Bash: playwright install chromium

⏺ Bash: python screenshot_test.py

✓ Screenshot saved to example_com_screenshot.png

The agent knew Browserbase uses Playwright and Chrome DevTools Protocol without looking up documentation.

Anchor failed on the first try:

⏺ Read .env file

⏺ Write test_screenshot.py (67 lines)

⏺ Bash: python test_screenshot.py

✗ Error: 404 Client Error for url: https://api.anchor.dev/v1/browser/screenshot

The agent guessed wrong on three things: the domain (anchor.dev instead of anchorbrowser.io), the authentication header format, and the request payload structure. It searched for documentation and corrected the errors:

⏺ Web Search: "Anchor browser API screenshot endpoint documentation"

⏺ Fetch: https://docs.anchorbrowser.io/api-reference/tools/screenshot-webpage

⏺ Update test_screenshot.py (corrections)

⏺ Bash: python test_screenshot.py

✓ Screenshot saved to example_com_screenshot.png

After corrections, the code looked similar to Browserless:

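    import os
    import requests

    # Environment variable name is assumed; the key goes in an "anchor-api-key" header
    ANCHOR_API_KEY = os.getenv('ANCHOR_API_KEY')
    headers = {"anchor-api-key": ANCHOR_API_KEY, "Content-Type": "application/json"}

    response = requests.post("https://api.anchorbrowser.io/v1/tools/screenshot",
        headers=headers,
        json={"url": "https://example.com", "width": 1920, "height": 1080})

    # Write the returned image bytes to disk
    with open('screenshot.png', 'wb') as f:
        f.write(response.content)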

All three platforms completed the screenshot test, but the experience was different. For Browserless and Browserbase, the agent had their API structures memorized. It generated working code without hesitation. For Anchor, the agent made educated guesses that turned out wrong, then recovered by searching documentation and fixing the errors.

This hello world test reveals something about agent experience that doesn't show up in traditional developer documentation. There's friction between "I want to try this" and "I have working code." When an agent knows the API structure, that friction is nearly zero. When it doesn't, the friction depends on how well it can find and use documentation. Anchor's documentation was good enough that the agent corrected itself, but there was still a debugging cycle that didn't exist for the other platforms.

Speed Benchmarks

I wanted a standardized way to measure platform performance. Steel published browserbench, an open-source benchmark that measures connection speed across browser automation platforms. Their results show Browserbase averaging 2,967ms per session, Anchor at 8,001ms, Kernel at 7,034ms, and Steel itself at 3,109ms across 5,000 runs per platform.

Browserbench tests four stages: session creation (API call to start a browser), CDP connection (connecting via Chrome DevTools Protocol), page navigation (loading example.com), and session cleanup (terminating the browser). The full test suite runs 5,000 sessions per platform.
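To make the four stages concrete, here is a minimal sketch of a per-stage timer. This is not browserbench's code: `StageTimer`, `run_once`, and the stand-in callables are hypothetical names, and the lambdas replace real provider and browser calls so the sketch runs without an account.

```python
import time
from contextlib import contextmanager

class StageTimer:
    """Records how long each named stage takes, in milliseconds."""

    def __init__(self):
        self.durations_ms = {}

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.durations_ms[name] = (time.perf_counter() - start) * 1000

    def total_ms(self):
        return sum(self.durations_ms.values())

def run_once(create_session, connect_cdp, navigate, release_session):
    """Time one full cycle through the four stages described above."""
    timer = StageTimer()
    with timer.stage("session_creation"):
        session = create_session()
    with timer.stage("cdp_connection"):
        browser = connect_cdp(session)
    with timer.stage("page_navigation"):
        navigate(browser)
    with timer.stage("session_cleanup"):
        release_session(session)
    return timer

# Stand-in callables; a real run would call the provider API and a CDP client here
timer = run_once(
    create_session=lambda: "session-1",
    connect_cdp=lambda session: object(),
    navigate=lambda browser: time.sleep(0.01),
    release_session=lambda session: None,
)
for name, ms in timer.durations_ms.items():
    print(f"{name}: {ms:.1f}ms")
print(f"total: {timer.total_ms():.1f}ms")
```

Timing each stage separately is what makes it possible to tell session creation overhead apart from CDP connection time.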

I couldn't run 5,000 sessions on free tiers. Most platforms limit you to 1,000 requests per month or cap concurrent sessions at 1. Running the full benchmark would burn through monthly quotas in minutes.

Testing Interactive Tasks with neal.fun

Instead of measuring raw connection speed, I wanted to test whether agents could handle complex interactive tasks. I found neal.fun, a collection of web games and challenges perfect for testing browser automation.

The specific target was neal.fun's "Not A Robot" game, whose visual puzzles require interpreting images and clicking the correct elements.

I tried writing agent code with Playwright and OpenAI's Vision API. The idea was to screenshot the puzzle, send it to GPT-4 Vision for analysis, parse the response for a CSS selector, then execute the click. The vision-to-action translation proved too difficult. GPT-4 Vision could describe what it saw ("click the stop sign"), but connecting that description to precise Playwright selectors or coordinates consistently failed.

I ended up using Stagehand with Browserbase. Stagehand is Browserbase's vision-native automation library that handles the screenshot → vision analysis → action execution pipeline internally:

    import { Stagehand } from "@browserbasehq/stagehand";

    const stagehand = new Stagehand({
      env: "BROWSERBASE",
      apiKey: process.env.BROWSERBASE_API_KEY,
      projectId: process.env.BROWSERBASE_PROJECT_ID
    });

    await stagehand.init();
    const page = stagehand.context.pages()[0];
    await page.goto('https://neal.fun/not-a-robot/');

    const maxAttempts = 20;
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      // Stagehand's act() handles: screenshot → vision → execution
      await stagehand.act({
        action: "Solve the current puzzle by clicking the correct element"
      });
      await page.waitForTimeout(2000);

      // Check progress with vision-based observation
      const observation = await stagehand.observe({
        instruction: "What level number is displayed?"
      });
    }

Here's the session replay showing the agent trying to brute force Level 2:

The agent got stuck on Level 2. Even with Stagehand abstracting the vision-to-action pipeline, the puzzles require interpreting ambiguous visual instructions and pixel-perfect interactions that current vision models struggle with.

For reference, I got stuck trying to draw a 94% perfect circle on Level 17.

Building a Free Tier Benchmark

The neal.fun tests weren't insightful for comparing platforms. The tasks were too complex, and failures came from agent limitations, not platform differences. I needed something simpler that measured what platforms actually optimize: connection overhead and basic automation speed.

I forked Steel's browserbench at github.com/ritza-co/ritza-browser-bench and asked the agent to adapt it for free tier testing. The agent added Browserless (which Steel's original didn't include), reduced the run count from 5,000 to 10 measured runs with 3 warmup runs, and simplified rate limiting logic.

Each provider implements a simple interface with create() and release() methods. Here's the Browserless implementation, which explains why its session creation has 0ms overhead:

    // Browserless provider - no session creation overhead
    async create(): Promise<ProviderSession> {
      const apiKey = this.getApiKey();
      const cdpUrl = `wss://${apiKey}@chrome.browserless.io`;
      const id = `browserless-${Date.now()}-${Math.random().toString(36).substring(7)}`;
      return { id, cdpUrl };
    }

    async release(id: string): Promise<void> {
      // No explicit release - sessions close when CDP connection drops
      return Promise.resolve();
    }

There's no API call during session creation—just constructing a WebSocket URL. Browserless closes sessions automatically when the CDP connection drops. This is different from Browserbase and Anchor, which both make API calls to create and terminate sessions.

Results from 10 measured runs per platform:

| Platform | Total Time | Session Creation | CDP Connection | Success Rate | Variance |
|---|---|---|---|---|---|
| Browserless | 4,264ms | 0ms | 3,085ms | 10/10 | 18% |
| Browserbase | 11,886ms | 5,838ms | 2,995ms | 10/10 | 21% |
| Anchor | 13,121ms | 5,801ms | 2,689ms | 10/10 | 38% |

Browserless completed the full cycle in 4.3 seconds. Session creation was instant, CDP connection took 3 seconds, and performance was consistent across all runs.

Browserbase averaged 11.9 seconds, but that average hides variance. Eight runs completed in 6-7 seconds. Two runs spiked to 26-27 seconds during session creation. Free tier rate limiting or resource contention likely caused these spikes.
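To illustrate how a couple of spikes distort an average, here is a small sketch using made-up run times. The values are hypothetical and are not the measured runs; they only mimic the pattern of eight fast runs and two slow ones.

```python
import statistics

# Hypothetical per-run totals in milliseconds: eight fast runs and two spikes.
# These are illustrative values, not the measurements from the benchmark.
run_times_ms = [6000] * 8 + [26000] * 2

mean_ms = statistics.mean(run_times_ms)
median_ms = statistics.median(run_times_ms)

print(f"mean:   {mean_ms:.0f}ms")
print(f"median: {median_ms:.0f}ms")
```

In this illustration the mean lands at 10,000ms while the median stays at 6,000ms, which is much closer to what a typical run feels like. Reporting the median alongside the average is one way to keep outliers from dominating a small sample.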

Anchor averaged 13.1 seconds with 5-6 seconds on session creation and 2-3 seconds on cleanup. Performance was slower than the other platforms on every run. The 38% variance was higher than Browserless (18%) or Browserbase (21%).

These numbers show connection speed. The benchmark doesn't test data extraction, authentication, CAPTCHA handling, or any real automation tasks. For those, I needed different tests.

Real-World Testing

Connection speed matters, but real projects encounter CAPTCHAs, need to debug failed selectors, run tasks in parallel, and avoid bot detection. I wanted to test whether agents could find documentation for these features and generate working code.

I added six test scenarios to the browserbench fork at github.com/ritza-co/ritza-browser-bench. Each test uses the same prompt for all three platforms to compare agent experience:

- Product search - Extract structured data from an e-commerce site

- Multi-page navigation - Click links, navigate between pages

- CAPTCHA handling - Solve reCAPTCHA

- Error recovery - Debugging tools when things break

- Parallel execution - Running multiple browsers simultaneously

- Bot detection - Stealth mode and anti-detection

You can run these tests yourself on the free tiers to verify the results.

Test 1: Product Search and Data Extraction

I asked the agent to scrape book titles and prices from books.toscrape.com:

"Write a script to extract the first 10 book titles and prices from books.toscrape.com using [platform]."

For all three platforms, the agent generated similar Playwright code without needing to look anything up:

    # Navigate to the page
    page.goto('http://books.toscrape.com/')

    # Extract first 10 products
    products = page.locator('article.product_pod').all()[:10]

    results = []
    for product in products:
        title = product.locator('h3 a').get_attribute('title')
        price = product.locator('.price_color').inner_text()
        results.append({"title": title, "price": price})

All three platforms extracted the data successfully, but performance varied:

| Platform | Duration | Products Extracted |
|---|---|---|
| Browserless | 22.8s | 10/10 |
| Browserbase | 15.5s | 10/10 |
| Anchor | 70.6s | 10/10 |

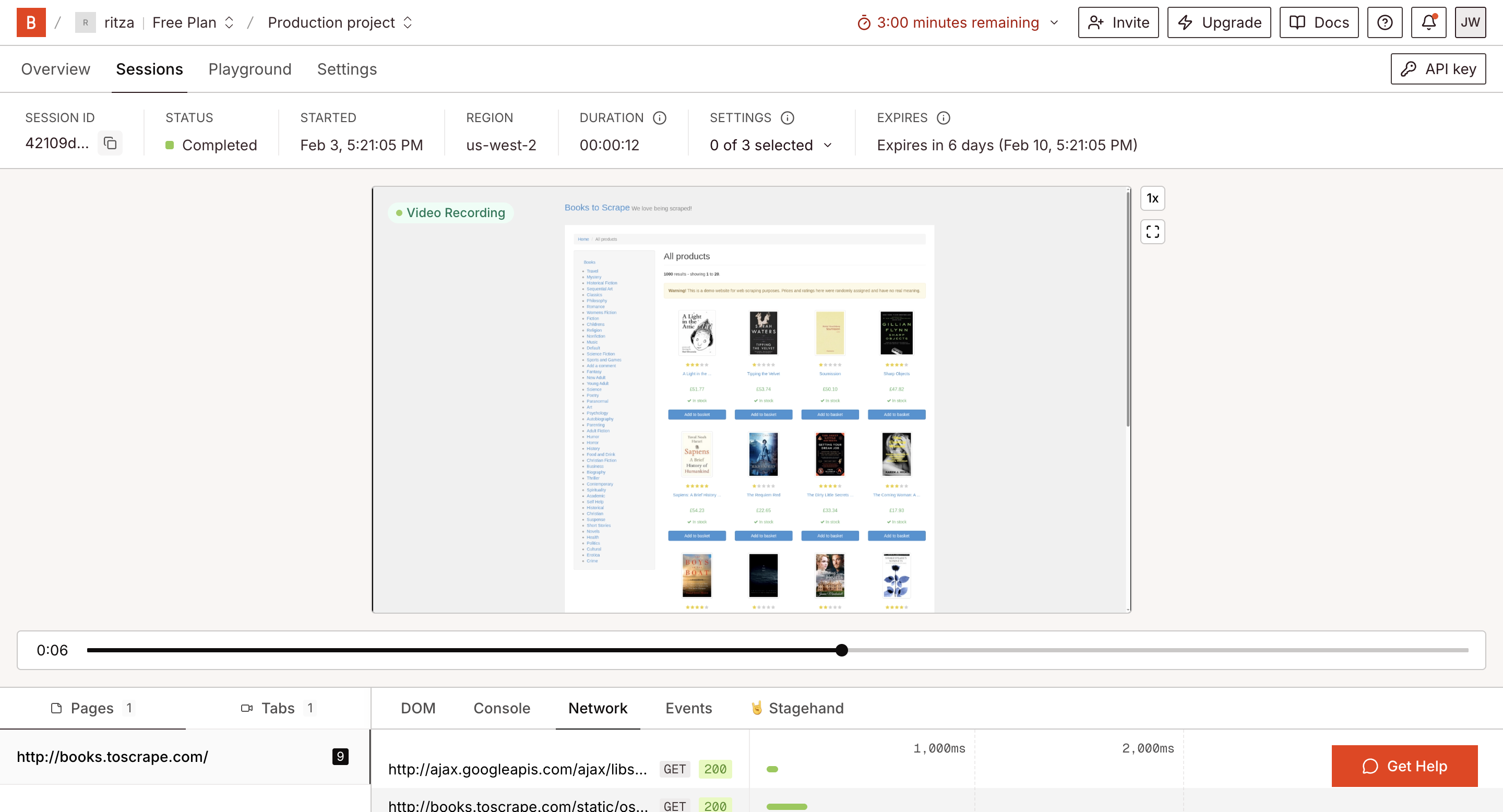

Browserbase's session replay captured the full execution:

Anchor took 70.6 seconds to load one page and extract 10 data points. Browserbase completed the same task in 15.5 seconds.

Test 2: Multi-Page Navigation

The next test involved clicking through to a detail page:

"Navigate from the books catalog to a product detail page, click the first book, and extract the title, price, and description."

The agent generated code for multi-step navigation:

    # Navigate to catalog
    page.goto('http://books.toscrape.com/catalogue/category/books_1/index.html')

    # Click first book
    page.locator('article.product_pod h3 a').first.click()
    page.wait_for_load_state('networkidle')

    # Extract details from product page
    title = page.locator('h1').inner_text()
    price = page.locator('.price_color').inner_text()
    description = page.locator('#product_description + p').inner_text()

All three platforms handled the navigation without errors:

| Platform | Duration |
|---|---|
| Browserless | 40.5s |
| Browserbase | 13.9s |
| Anchor | 32.1s |

The video shows the browser clicking through from the catalog to the product detail page:

Test 3: CAPTCHA Solving

"Navigate to Google's reCAPTCHA demo page and solve the CAPTCHA."

The agent knew about Browserless's CAPTCHA solving immediately. It generated code using CDP events to listen for CAPTCHA detection and solving:

    # Connect with CAPTCHA solving enabled
    endpoint = f"wss://production-sfo.browserless.io/stealth?token={TOKEN}&solveCaptchas=true"
    browser = await playwright.chromium.connect_over_cdp(endpoint)

    # Set up CDP event listeners
    cdp = await page.context.new_cdp_session(page)
    cdp.on("Browserless.captchaFound", on_captcha_found)
    cdp.on("Browserless.captchaAutoSolved", on_captcha_auto_solved)

    # Navigate and wait for solve
    await page.goto("https://www.google.com/recaptcha/api2/demo")
    await captcha_events['solved'].wait()

    # Submit the form
    await page.click("#recaptcha-demo-submit")

Only Browserless solved the CAPTCHA automatically:

| Platform | CAPTCHA Solved | Duration |
|---|---|---|
| Browserless | ✅ Yes | 39.2s |
| Browserbase | ❌ No | 41.8s |
| Anchor | ❌ No | 51.6s |

Browserless detected the CAPTCHA, solved it in 17 seconds, and generated a valid token:

The agent had to add code to submit the form after the solve. The platform handles detection and token generation, not form submission.

Browserbase doesn't include CAPTCHA solving on the free tier.

Anchor's documentation advertises a "vision-based solver" that requires a proxy. I enabled both and waited. The CAPTCHA remained unsolved. Anchor's documentation shows CAPTCHA solving as a feature, but it didn't work on the demo page.

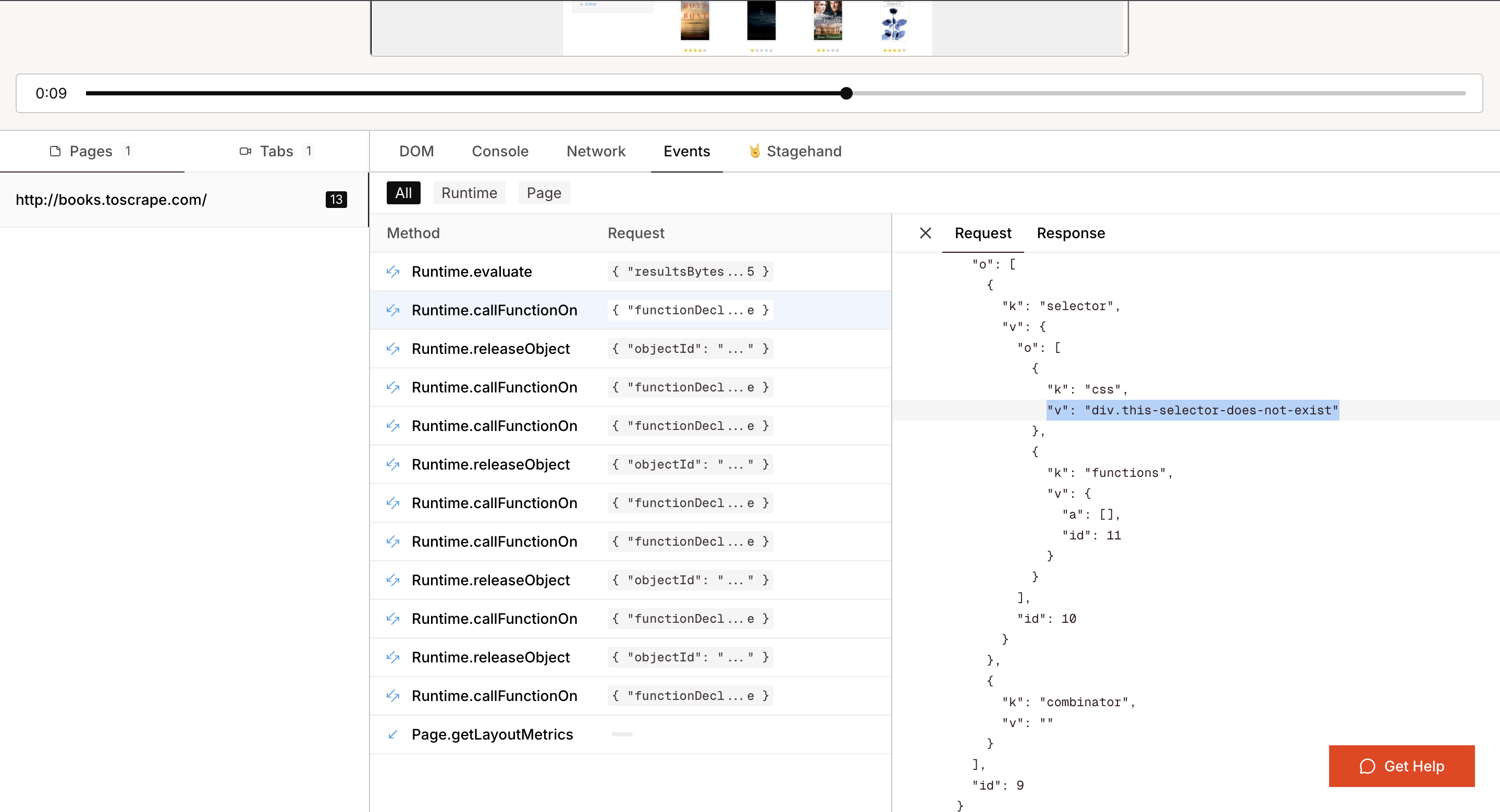

Test 4: Debugging Tools

"Navigate to books.toscrape.com and try to click a selector that doesn't exist. Capture what happens."

The agent generated standard error-handling code for all three platforms:

    try:
        page.locator('div.this-selector-does-not-exist').click(timeout=5000)
    except Exception as e:
        # Record the error type, then capture the page state for debugging
        print(f"Error: {type(e).__name__}")
        page.screenshot(path='error_state.png')
        html_content = page.content()

All three platforms caught the error, but debugging tools varied:

| Platform | Duration | Session Replay Available |
|---|---|---|
| Browserless | 20.5s | ❌ No (Enterprise only) |
| Browserbase | 19.8s | ✅ Yes (free tier) |
| Anchor | 30.0s | ❌ Unclear |

Browserbase's logs panel captured every browser event during the error:

Browserbase recorded the session automatically. I could watch the full video, scrub through the timeline, and inspect the DOM at each error point. The Logs API returned 129 entries showing every browser event, network request, and console message.

Browserless limits session replay to enterprise plans. On the free tier, I had screenshots, HTML dumps, and console capture. Standard Playwright debugging.

Anchor showed a Live View URL during script execution, but no post-session replay.

Test 5: Parallel Execution

"Scrape three product categories (Travel, Mystery, Historical Fiction) simultaneously from books.toscrape.com."

The agent generated async code to launch three browsers in parallel:

    import asyncio

    async def scrape_category(category_name, category_url):
        results = []
        # Create browser session
        # Navigate to category
        # Extract products
        return results

    categories = [
        ("Travel", "books_2"),
        ("Mystery", "books_3"),
        ("Historical Fiction", "books_4")
    ]

    async def main():
        # Launch all three scrapes concurrently
        return await asyncio.gather(*[
            scrape_category(name, url) for name, url in categories
        ])

    results = asyncio.run(main())

The code worked, but the results revealed different concurrency limits:

| Platform | Duration | Categories Completed | Concurrency Limit |
|---|---|---|---|
| Browserless | 10.4s | 1/3 | 10 (advertised) |
| Browserbase | 55.3s | 3/3 | 1 (free tier) |
| Anchor | 60.8s | 3/3 | 5 (free tier) |

Browserless advertises 10 concurrent sessions on the free tier, but two of the three parallel sessions hit 429 Too Many Requests errors.

Browserbase ran all three categories sequentially. The free tier allows 1 concurrent session.

Anchor completed all three categories in parallel without errors.
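One way to cope with differing limits is to cap concurrency on the client side and retry when a request is rate limited. The sketch below is a minimal illustration with standard-library asyncio only: `run_bounded`, `RateLimited`, and `fake_scrape` are hypothetical names, and the fake scrape stands in for a real browser session. It was not part of the tests above.

```python
import asyncio

class RateLimited(Exception):
    """Stand-in for an HTTP 429 Too Many Requests response from a provider."""

async def run_bounded(tasks, limit, retries=3, base_delay=0.01):
    """Run zero-argument coroutine functions with at most `limit` in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(task):
        async with semaphore:
            for attempt in range(retries + 1):
                try:
                    return await task()
                except RateLimited:
                    if attempt == retries:
                        raise
                    # Exponential backoff before the next attempt
                    await asyncio.sleep(base_delay * 2 ** attempt)

    return await asyncio.gather(*(guarded(task) for task in tasks))

# Bookkeeping for the demo: concurrent sessions and calls per category
active = 0
peak = 0
attempts = {}

async def fake_scrape(name):
    """Pretend to scrape a category; the first Mystery call is rate limited."""
    global active, peak
    attempts[name] = attempts.get(name, 0) + 1
    if name == "Mystery" and attempts[name] == 1:
        raise RateLimited(name)
    active += 1
    peak = max(peak, active)
    try:
        await asyncio.sleep(0.01)
        return name
    finally:
        active -= 1

categories = ["Travel", "Mystery", "Historical Fiction"]
results = asyncio.run(
    run_bounded([lambda n=n: fake_scrape(n) for n in categories], limit=1)
)
print(results, "peak concurrency:", peak)
```

Setting `limit` to the free-tier allowance (1 for Browserbase, for example) makes the sequential behavior explicit instead of leaving it to the platform, and the retry loop gives a rate-limited session a second chance rather than dropping the category.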

Test 6: Bot Detection and Stealth Mode

"Navigate to bot.sannysoft.com and capture what bot detection checks are triggered."

The agent knew about Browserless's stealth endpoint and generated code comparing both modes:

    # Standard endpoint
    standard = f"wss://production-sfo.browserless.io?token={TOKEN}"

    # Stealth endpoint (free tier)
    stealth = f"wss://production-sfo.browserless.io/stealth?token={TOKEN}"

The test checked whether bot detection indicators appeared on bot.sannysoft.com:

| Platform | Standard Mode | Stealth Mode | Duration |
|---|---|---|---|
| Browserless | ✅ Passed | ✅ Passed | 42.9s |
| Browserbase | ⚠️ Detected | N/A ($20/mo) | 22.0s |
| Anchor | ❌ Timeout | ❌ Timeout | 69.1s |

Browserless passed in both modes. Even without stealth enabled, the standard endpoint showed no detectable webdriver or headless indicators.

Browserbase's standard mode was detected. Webdriver properties exposed, headless indicators visible. The agent found documentation mentioning stealth mode, but it requires a paid plan.

Anchor timed out after 20 seconds. I tried both standard mode and "Cloudflare Web Bot Auth" mode from the documentation. Neither worked on this test page.

Results Summary

| Feature | Browserless | Browserbase | Anchor |
|---|---|---|---|
| Discoverability | 75% (9/12 queries) | 67% (8/12 queries) | 8% (1/12 queries) |
| Agent Knowledge | No docs needed | No docs needed | 3 corrections after 404 |
| Speed (avg) | 4.3s | 11.9s (2 spikes to 26s) | 13.1s |
| Product Search | 22.8s | 15.5s | 70.6s |
| Multi-Page Nav | 40.5s | 13.9s | 32.1s |
| CAPTCHA Solving | ✅ Free tier | ❌ Paid only | ❌ Documented, failed |
| Session Replay | ❌ Enterprise only | ✅ Free tier | ❌ Live view only |
| Parallel (3 sessions) | ⚠️ 1/3 completed | ⚠️ 3/3 sequential | ✅ 3/3 parallel |
| Stealth Mode | ✅ Free tier | ❌ Paid only | ❌ Timed out |
| Free Tier | 1k units/month, 10 concurrent | 1 hour/month, 1 concurrent | $5 credits, 5 concurrent |
| Entry Price | $140/month | $20/month | $20/month |

Browserless had zero session creation overhead in speed tests and solved CAPTCHAs on the free tier. The agent generated working code immediately without documentation lookups. Free tier rate limiting hit at 3 concurrent sessions despite advertising 10.

Browserbase provided session replay on the free tier with full video playback and DOM inspection at each error point. The agent knew Playwright patterns without looking up docs. Speed tests had two runs spike to 26-27 seconds.

Anchor completed all 3 parallel scraping tasks without rate limiting, while the other platforms either failed or ran sequentially. It was the slowest platform in the connection benchmark and the product search test, and it had the highest variance in the benchmark (38%). The agent needed to search documentation and make three corrections after a 404 error. Two documented features (CAPTCHA solving, stealth mode) failed in testing.

Conclusion

I didn't know about Browserless or Anchor before starting this article. The agent did, and that shaped the entire experience.

The compounding advantage of visibility

Training data frequency creates a feedback loop. Platforms that agents recommend frequently get used more, which generates more blog posts and examples, which trains future models to recommend them even more. Low-visibility platforms face the opposite spiral. Agents don't recommend them, developers don't use them, no new training data gets created.

Discovery matters more for agents than for humans

The testing revealed a gap between developer experience and agent experience. Developer experience assumes humans will discover tools through conferences, colleagues, and targeted searches, then read documentation to learn them. Agent experience depends on whether the platform was visible enough during training to encode working knowledge into the model. A human can discover a niche tool and learn it. An agent without training data starts from zero every time.

This creates a different optimization problem for platform companies. Developer experience optimizes the journey after discovery: smooth onboarding, clear documentation, responsive support. Agent experience must optimize discovery first. Excellent documentation doesn't help if agents don't know you exist. The platforms with strong agent experience (Browserless, Browserbase) probably didn't optimize for agents intentionally. They just built good developer experiences and published enough examples that agents learned their APIs.

What comes next

As more developers start projects by asking agents "what should I use" instead of searching Google, this dynamic becomes infrastructure. Platforms with existing visibility have a compounding advantage. New platforms need to earn mentions in public forums, publish working code examples, and build agent knowledge over time. Training data doesn't update instantly. A blog post written today might not influence agent recommendations for months or years.

Agent experience is becoming as important as developer experience for developer tools. Platforms that recognize this early will adapt. Those that don't will wonder why developers keep choosing the same few options, even when better alternatives exist.